BestKet --- Kaan Demirel, Ufuk Bugday

01/31/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

This semester, we are going to deliver the planned items according to the Gantt Chart, ending up with a program that:

1) Gets a video stream from the user and detects the basketball and the human joints

2) Calculates the current factors and returns the information to the user

3) Calculates the suggested factors through animations and returns the information to the user

4) Creates an animation for the best shot formula and returns the information to the user

As we discussed, we updated the Gantt Chart. Under the new plan, we will start implementing the detection algorithms by February 1st.

02/07/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

-Started implementing the ball detection and tracking algorithms.

-As we planned last semester, we researched the findContours function in OpenCV for ball tracking.

-Researched whether different lighting conditions affect the ball tracking results.

-findContours gave us good enough results to move forward. Next week, we will try to improve our detection and tracking success rate, and we will start reading about human joint detection.
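findContours returns every outline it finds, so a ball candidate still has to be chosen among them. A common heuristic, which we assume here rather than take from the project, is circularity 4πA/P², which equals 1 for a perfect circle and drops for elongated or angular shapes. The sketch below computes it in plain C++ for a contour stored as a list of points; with OpenCV, A and P would normally come from cv::contourArea and cv::arcLength. The 0.85 threshold and the function names are hypothetical and would need tuning on real footage.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 { double x; double y; };  // one contour vertex, in pixels

// Shoelace formula: area of the closed polygon through the vertices.
double polygonArea(const std::vector<Point2>& c) {
    double twiceArea = 0.0;
    for (std::size_t i = 0; i < c.size(); ++i) {
        const Point2& p = c[i];
        const Point2& q = c[(i + 1) % c.size()];  // wrap around to close the shape
        twiceArea += p.x * q.y - q.x * p.y;
    }
    return std::fabs(twiceArea) / 2.0;
}

// Perimeter of the closed polygon (last vertex connects back to the first).
double polygonPerimeter(const std::vector<Point2>& c) {
    double length = 0.0;
    for (std::size_t i = 0; i < c.size(); ++i) {
        const Point2& p = c[i];
        const Point2& q = c[(i + 1) % c.size()];
        length += std::hypot(q.x - p.x, q.y - p.y);
    }
    return length;
}

// Circularity 4*pi*A / P^2: 1.0 for a circle, smaller for other shapes.
double circularity(const std::vector<Point2>& contour) {
    assert(contour.size() >= 3);
    const double pi = std::acos(-1.0);
    const double p = polygonPerimeter(contour);
    return 4.0 * pi * polygonArea(contour) / (p * p);
}

// Hypothetical acceptance rule for a ball candidate; the threshold is a guess.
bool looksLikeBall(const std::vector<Point2>& contour, double minCircularity = 0.85) {
    return circularity(contour) >= minCircularity;
}
```

A square scores about 0.785 and a thin rectangle much less, so both are rejected, while a finely sampled circle scores close to 1.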

02/14/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

-While reading about human joint detection methods, we found an easier and more effective way to recognize body posture: instead of a machine, we will rely on a better observer, the human eye.

-For human joint detection, after the user uploads his/her video, we will show the first frame and ask the user to mark the needed points, such as the hands, elbows, shoulders, and knees.

-After getting the coordinates of all the points, we will do multi-point tracking using Blender (this might change if we find a better tool).

-With Blender, human joint detection and tracking will be much faster and more effective.

-Then, we will pass the data from Blender to Unity to continue building the animations.

-This change of method won't affect our schedule, and the Gantt Chart will remain the same.

-We are planning to finish ball detection and tracking this week, and we are currently on schedule.

02/21/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

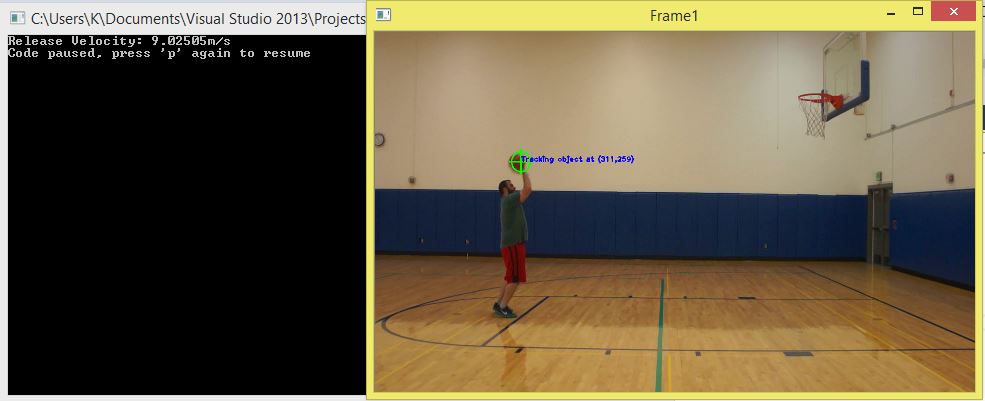

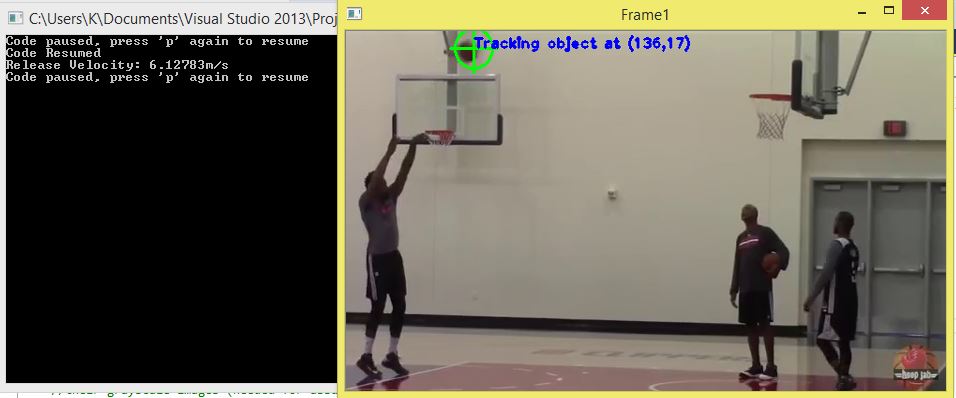

- Reduced the noise in our stream to improve ball detection and tracking accuracy.

- Successfully detected and tracked the ball in several sample streams.

- Calculated the release velocity of the ball.

- For next week, here is what we are planning to do:

- - Detecting the hoop and finding the distance between the basketball and the hoop.

- - Updating the UI according to our changes (we need to show the first frame of the video to the user so he/she can mark the human joints).

- - Passing the coordinates to Blender.

- We have finished the basketball work, and we are currently on schedule.

- We expect to finish human joint detection by mid-March.
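Release velocity can be estimated from the ball centres tracked in two consecutive frames. This sketch assumes the frame rate is known and uses a hypothetical pixelsPerMeter scale, since the entry does not say how pixels are converted to real units (one option would be a reference object of known size, such as the ball). Image y grows downward, so the vertical difference is flipped to make a positive angle mean upward. The same distance helper covers the straight-line ball-to-hoop distance planned above, in pixels. All names are illustrative.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { double x; double y; };  // image coordinates in pixels

struct Velocity {
    double speed;         // meters per second, under the assumed scale
    double angleDegrees;  // launch angle above the horizontal
};

// Straight-line distance in pixels between two image points,
// e.g. the ball centre and the hoop centre.
double pixelDistance(Vec2 a, Vec2 b) {
    return std::hypot(b.x - a.x, b.y - a.y);
}

// Velocity from two ball centres taken one frame apart.
// fps: frame rate of the video; pixelsPerMeter: hypothetical scale factor.
Velocity releaseVelocity(Vec2 prev, Vec2 next, double fps, double pixelsPerMeter) {
    assert(fps > 0.0 && pixelsPerMeter > 0.0);
    const double dx = next.x - prev.x;
    const double dyUp = prev.y - next.y;  // flip: image y grows downward
    const double pi = std::acos(-1.0);
    return {std::hypot(dx, dyUp) * fps / pixelsPerMeter,
            std::atan2(dyUp, dx) * 180.0 / pi};
}
```

For example, a move of 3 px right and 4 px up between frames at 30 fps and 10 px per meter gives 15 m/s at about 53.13°. A two-frame difference is sensitive to tracking noise; averaging over a few frames right after the release would give a steadier estimate.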

02/28/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

-Rather than showing the first frame to the user, we decided to show the frame where the shooter releases the ball.

-To detect the ball release more accurately, we came up with this approach:

- -Stream the video backwards, from the hoop to the hand.

- -Check the coordinates of the ball (x, y).

- -Check whether these coordinates follow a parabolic path (we will build a parabolic function from the coordinates).

- -The ball release happens where the ball deviates from the parabola.
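The approach above can be sketched as a least-squares fit of y = ax² + bx + c followed by a backwards walk. Assumptions, none of them from the original plan: the ball's x coordinate changes steadily during flight (a roughly side-on camera), the last few tracked points near the hoop are known to be in flight and seed the fit, and the tolerance in pixels is a value to tune. The names BallPos, fitParabola, and findReleaseIndex are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct BallPos { double x; double y; };  // ball centre in pixels, one per frame
struct Parabola { double a, b, c; };     // y = a*x^2 + b*x + c

// Least-squares fit of y = a*x^2 + b*x + c. Builds the 3x3 normal equations
// and solves them with Gaussian elimination and partial pivoting.
Parabola fitParabola(const std::vector<BallPos>& pts) {
    assert(pts.size() >= 3);
    std::array<double, 5> sx{};   // sx[k]  = sum of x^k,     k = 0..4
    std::array<double, 3> sxy{};  // sxy[k] = sum of x^k * y, k = 0..2
    for (const BallPos& p : pts) {
        double xk = 1.0;
        for (std::size_t k = 0; k < 5; ++k) {
            sx[k] += xk;
            if (k < 3) sxy[k] += xk * p.y;
            xk *= p.x;
        }
    }
    // Augmented matrix; the unknowns are ordered (c, b, a).
    std::array<std::array<double, 4>, 3> m{{
        {sx[0], sx[1], sx[2], sxy[0]},
        {sx[1], sx[2], sx[3], sxy[1]},
        {sx[2], sx[3], sx[4], sxy[2]},
    }};
    for (std::size_t col = 0; col < 3; ++col) {
        std::size_t pivot = col;
        for (std::size_t r = col + 1; r < 3; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[pivot][col])) pivot = r;
        std::swap(m[col], m[pivot]);
        for (std::size_t r = col + 1; r < 3; ++r) {
            const double f = m[r][col] / m[col][col];
            for (std::size_t k = col; k < 4; ++k) m[r][k] -= f * m[col][k];
        }
    }
    std::array<double, 3> sol{};  // back substitution gives (c, b, a)
    for (std::size_t i = 3; i-- > 0;) {
        double v = m[i][3];
        for (std::size_t k = i + 1; k < 3; ++k) v -= m[i][k] * sol[k];
        sol[i] = v / m[i][i];
    }
    return {sol[2], sol[1], sol[0]};
}

// Walks the track backwards (hoop -> hand). The last `seedCount` points seed
// the fit; the release is the earliest frame still within `tolerancePx` of
// that parabola. Returns an index into `track`.
std::size_t findReleaseIndex(const std::vector<BallPos>& track,
                             std::size_t seedCount, double tolerancePx) {
    assert(seedCount >= 3 && track.size() >= seedCount);
    const std::size_t seedStart = track.size() - seedCount;
    const Parabola p = fitParabola(std::vector<BallPos>(
        track.begin() + static_cast<std::ptrdiff_t>(seedStart), track.end()));
    std::size_t release = seedStart;
    for (std::size_t i = seedStart; i-- > 0;) {
        const double x = track[i].x;
        const double predicted = p.a * x * x + p.b * x + p.c;
        if (std::fabs(track[i].y - predicted) > tolerancePx) break;  // off the parabola: ball still in hand
        release = i;
    }
    return release;
}
```

If one fit seeded near the hoop extrapolates poorly back toward the hand, two variations are worth trying: refitting as each accepted point is added, or fitting y against the frame index, since in drag-free projectile motion the height is quadratic in time and the horizontal position is linear in time.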

03/06/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

-Last week, we worked on our UI design and our ball release detection algorithm.

-For ball release detection, we searched for the most efficient way to reverse a video.

- -OpenCV's built-in function does the job, but it uses a lot of memory (on Kaan's computer, we got a blue screen of death while trying to reverse a 6-second 4K video).

- -We might use an external tool to reverse the video; we are currently looking for ways to integrate such tools into our program.

- -Another option is to keep using OpenCV's built-in methods, but to do so, we need to reduce the video resolution and length.
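A rough estimate shows why buffering a whole 4K clip is so costly. Assuming frames are kept as uncompressed 8-bit, 3-channel images (the usual layout of a decoded frame in a cv::Mat) and a hypothetical 30 frames per second, a 6-second 3840×2160 clip needs about 4.5 GB, while the same clip at 1280×720 needs about 0.5 GB. The function name is illustrative.

```cpp
#include <cassert>
#include <cstdint>

// Bytes needed to hold every decoded frame of a clip in memory at once,
// assuming uncompressed 8-bit pixels with `channels` channels each.
std::uint64_t bufferedClipBytes(std::uint64_t width, std::uint64_t height,
                                std::uint64_t channels, std::uint64_t fps,
                                std::uint64_t seconds) {
    assert(width > 0 && height > 0 && channels > 0);
    const std::uint64_t bytesPerFrame = width * height * channels;
    return bytesPerFrame * fps * seconds;  // frames = fps * seconds
}
```

If release detection only needs the ball coordinates, storing one (x, y) pair per frame during a forward pass and walking that list backwards would avoid keeping the frames in memory at all.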

-We updated our UI design.

- -Currently working on the connection between the UI and our C++ application.

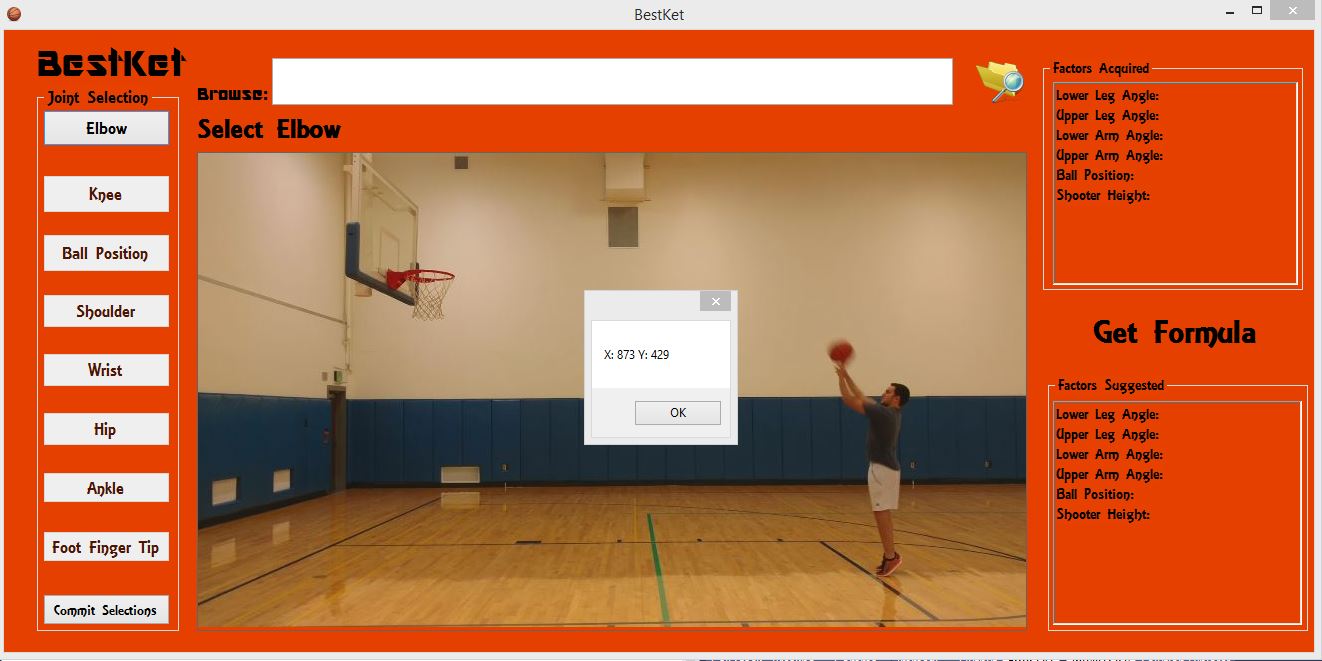

- -New UI looks like this:

-For next week:

- -Will finish ball release detection.

- -Will finish the connection between the UI and the C++ application.

- -Will show the release frame to the user, let him/her select the joints, and start calculating the factors.

- -According to our Gantt Chart, we are on schedule as of now.

03/13/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

03/12 Update:

-Finished the connection between UI and C++ application.

-Used DllImport to be able to call our C++ method from C#.

-Built a DLL that exposes to C# a method from our C++ application, where our image processing algorithms run.

-The method receives a video file path from C# and returns the frame number where the ball release occurs (we are still working on ball release detection).
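For DllImport to find the method, the C++ side has to export it with C linkage so its name is not mangled, and it should use only plain types such as const char* and int. The sketch below shows that pattern; the names FindReleaseFrame, detectReleaseFrame, and BestKet.dll are hypothetical, detectReleaseFrame is only a placeholder for the real OpenCV code, and returning -1 on failure is our assumption. The matching C# declaration is shown as a comment.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

#if defined(_WIN32)
#define BESTKET_API __declspec(dllexport)
#else
#define BESTKET_API __attribute__((visibility("default")))
#endif

// Placeholder for the real C++/OpenCV release detection (hypothetical).
// Here it only validates its input and returns frame 0.
int detectReleaseFrame(const std::string& videoPath) {
    if (videoPath.empty()) throw std::invalid_argument("empty video path");
    return 0;
}

// Exported with C linkage so C# can bind to it by name through DllImport.
// C++ exceptions must not cross the DLL boundary, so failures map to -1.
//
// C# side (hypothetical names):
//   [DllImport("BestKet.dll", CallingConvention = CallingConvention.Cdecl)]
//   static extern int FindReleaseFrame(string videoPath);
extern "C" BESTKET_API int FindReleaseFrame(const char* videoPath) {
    if (videoPath == nullptr) return -1;
    try {
        return detectReleaseFrame(videoPath);
    } catch (...) {
        return -1;  // signal failure to the C# caller
    }
}
```

Stating CallingConvention.Cdecl explicitly matters on 32-bit Windows, where DllImport otherwise assumes the stdcall convention while C++ functions default to cdecl.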

03/13 Update:

-Ufuk is working on the WinForms/UI side (showing the frame to the user and letting him/her select the joints).

-Kaan is working on the ball release detection.

- -Found a way to reverse the stream. It still takes around 20 seconds for a 5 to 6 second high-definition video, but that is acceptable for now.

- -Tried fitting the ball's changing coordinates to a parabolic function, but it doesn't give the expected results.

- -Will work on the algorithm next week; I need to do more research on the ball's path on its way to the basket.

03/20/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

-We discussed our ball release detection algorithm with Professor Jones.

-We got some ball release detection results, but the output was not what we expected.

-We will work on the math side of the algorithm next week to improve results.

-We are also continuing to implement the UI features.

-We expect to complete these two tasks before Spring Break.

-Over Spring Break, we plan to complete all detection and UI features. Then we will start building the animations and working on the machine learning part.

03/27/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

SPRING BREAK

04/04/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

-The success rate of our ball release detection algorithm is around 50 percent, which is not yet acceptable. We will continue working on the algorithm to improve its accuracy.

-On the UI side, the user can now select the joints on a given image. This week, we will continue by calculating the angles from the selected coordinates.

-By the end of this week, we plan to start working with Unity. Throughout the week, we will research ways for WinForms and a Unity project to communicate.

04/11/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

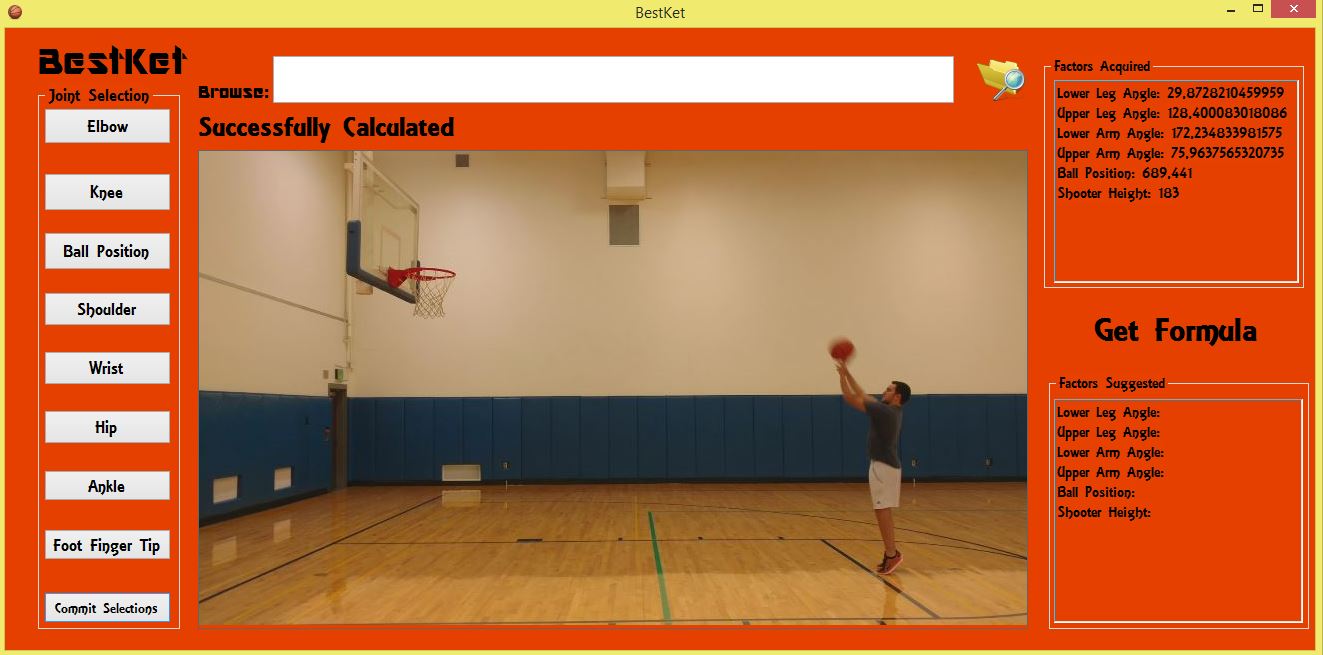

-Done calculating the angles by getting human joint positions from the user.

-Started researching on connecting WinForms and Unity.

-Here is the current look of our UI:

- -User clicks the 'Elbow' button and points the elbow using cursor.

- -User clicks 'Commit Selections' button and results are displayed on the 'Factors Acquired' area.
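The angle at a joint can be computed from three user-selected points, for example the elbow angle from the shoulder, elbow, and hand clicks, as the angle between the two vectors pointing away from the middle joint. The sketch below is our illustration of that calculation, not necessarily the exact formula in the project; the names are hypothetical. It takes atan2 of the cross and dot products, which stays numerically stable near 0° and 180°, unlike acos of a normalized dot product.

```cpp
#include <cassert>
#include <cmath>

struct Pixel { double x; double y; };  // a point clicked by the user

// Angle in degrees (0..180) at joint `b`, formed by the segments b->a and b->c.
// Example: a = shoulder, b = elbow, c = hand gives the elbow angle.
double jointAngleDegrees(Pixel a, Pixel b, Pixel c) {
    const double ux = a.x - b.x, uy = a.y - b.y;  // vector from joint to first point
    const double vx = c.x - b.x, vy = c.y - b.y;  // vector from joint to second point
    assert((ux != 0.0 || uy != 0.0) && (vx != 0.0 || vy != 0.0));
    const double cross = ux * vy - uy * vx;
    const double dot = ux * vx + uy * vy;
    const double pi = std::acos(-1.0);
    // fabs(cross) makes the result independent of the image's y-axis direction.
    return std::atan2(std::fabs(cross), dot) * 180.0 / pi;
}
```

A bent arm with the hand at a right angle to the upper arm gives 90°, and a fully straight arm gives 180°.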

04/18/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

04/25/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

05/01/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.

05/08/2016 Weekly activities, including accomplishments, problems, changes to plan, meeting minutes, etc.