Vehware --- Ali Arda Eker

09/14/2016

- The CPT form was prepared and submitted.

- Spoke with Mr. Bill (CEO of Vehware) about his company's possible projects in visual information processing.

- Spoke with Prof. Yin about his project with Vehware.

09/21/2016

- The CPT form was accepted and activated.

- Prof. Yin approved my working on his project with Vehware.

- Bill arranged a meeting for me with an executive from Coca-Cola who is interested in image processing.

- Watched Bill's demo on visual information processing for the Coca-Cola executive.

09/28/2016

- Talked to Peng Liu, Prof. Yin's PhD student. We will be working on the eye-tracking project together.

- Installed the related software and frameworks on my PC. OpenCV and Visual Studio 2015 will be used.

- Sent an email to Peng. Waiting to hear from him or Prof. Yin about the next steps.

10/05/2016

Goal of the project:

Creating a smart wheelchair that captures the patient's eye motions and moves where he or she looks, without the need for hand gestures.

Requirements:

Cameras will be attached to the wheelchair to sense the patient's eye movements. The patient will not need to wear a helmet.

I am assigned to implement the AdaBoost algorithm using OpenCV. AdaBoost (Adaptive Boosting) is a supervised classification method that combines the outputs of many weak classifiers instead of relying on a single monolithic strong classifier such as an SVM or a neural network. Decision trees are the most popular weak classifiers for AdaBoost. A decision tree here is a binary tree (each non-leaf node has 2 children), and for classification each leaf is marked with a class label. Multiple leaves may have the same label.
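To make the decision-tree description concrete, here is a minimal standalone Python sketch (it does not use OpenCV). The `Node` class, the `<=` branching rule and the example tree are hypothetical, chosen only to show a binary tree whose internal nodes test one feature and whose leaves carry class labels, with two leaves sharing the label +1.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    """One node of a binary decision tree.

    Internal nodes compare one feature with a threshold; leaves hold a class label.
    """
    feature: int = 0                  # index of the feature component tested at this node
    threshold: float = 0.0            # go left if x[feature] <= threshold, else right
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[int] = None       # set only on leaves, e.g. -1 or +1

    def is_leaf(self) -> bool:
        return self.label is not None


def predict(node: Node, x: Sequence[float]) -> int:
    """Walk from the root down to a leaf and return that leaf's class label."""
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label


# Hypothetical depth-2 tree: two different leaves carry the same label +1.
tree = Node(
    feature=0, threshold=0.5,
    left=Node(feature=1, threshold=2.0, left=Node(label=+1), right=Node(label=-1)),
    right=Node(label=+1),
)
print(predict(tree, [0.2, 1.0]), predict(tree, [0.2, 3.0]), predict(tree, [0.9, 0.0]))  # 1 -1 1
```

A depth-1 tree (a single test and two leaves) is called a decision stump and is a common choice of weak classifier in boosting.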

AdaBoost model:

$$Y = F(X), \qquad X_i \in \mathbb{R}^k, \qquad Y_i \in \{-1, +1\}$$

Each sample $X_i$ is a $k$-component vector, and each component encodes a feature relevant to the learning task.
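For reference, the textbook two-class Discrete AdaBoost procedure can be written with the same $X_i$, $Y_i$ and $F$ as above. The symbols $N$ (number of samples), $M$ (number of rounds), $f_m$ (weak classifiers), $c_m$ (their weights) and $w_i$ (sample weights) are notation introduced here:

$$
\begin{aligned}
&w_i = 1/N, \quad i = 1, \dots, N \\
&\text{for } m = 1, \dots, M: \\
&\qquad \text{fit } f_m(X) \in \{-1, +1\} \text{ to the training data using weights } w_i \\
&\qquad \mathrm{err}_m = \textstyle\sum_i w_i \, \mathbf{1}[Y_i \ne f_m(X_i)], \qquad c_m = \log\dfrac{1 - \mathrm{err}_m}{\mathrm{err}_m} \\
&\qquad w_i \leftarrow w_i \exp\big(c_m \, \mathbf{1}[Y_i \ne f_m(X_i)]\big), \text{ then renormalize so that } \textstyle\sum_i w_i = 1 \\
&F(X) = \operatorname{sign}\Big(\textstyle\sum_{m=1}^{M} c_m f_m(X)\Big)
\end{aligned}
$$

Misclassified samples gain weight, so each new weak classifier concentrates on the examples the previous ones got wrong.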

Boosting Types:

1) Two-class Discrete AdaBoost (what I will implement)

2) Real AdaBoost

3) LogitBoost

4) Gentle AdaBoost
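A minimal sketch of two-class Discrete AdaBoost with decision stumps as the weak classifiers, in standalone Python rather than through OpenCV. The stump encoding `(feature, threshold, polarity)`, the exhaustive threshold search and the toy data set are assumptions made for illustration; the weight update follows the standard Discrete AdaBoost rule ($c_m = \log((1-\mathrm{err}_m)/\mathrm{err}_m)$, misclassified weights multiplied by $e^{c_m}$).

```python
import math
from typing import List, Sequence, Tuple

# A decision stump (depth-1 tree) as (feature index, threshold, polarity):
# it predicts +polarity when x[feature] > threshold and -polarity otherwise.
Stump = Tuple[int, float, int]


def stump_predict(stump: Stump, x: Sequence[float]) -> int:
    feature, threshold, polarity = stump
    return polarity if x[feature] > threshold else -polarity


def best_stump(X: Sequence[Sequence[float]], y: Sequence[int], w: Sequence[float]) -> Tuple[Stump, float]:
    """Exhaustively pick the stump with the lowest weighted training error."""
    best: Stump = (0, 0.0, 1)
    best_err = float("inf")
    for feature in range(len(X[0])):
        values = sorted({x[feature] for x in X})
        # Candidates: one threshold below every value, plus midpoints between neighbours.
        thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
        for threshold in thresholds:
            for polarity in (1, -1):
                stump = (feature, threshold, polarity)
                err = sum(wi for xi, yi, wi in zip(X, y, w) if stump_predict(stump, xi) != yi)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err


def train_discrete_adaboost(X, y, rounds: int = 10) -> List[Tuple[float, Stump]]:
    """Two-class Discrete AdaBoost with labels in {-1, +1}; returns [(c_m, stump_m), ...]."""
    n = len(X)
    w = [1.0 / n] * n                      # start with uniform sample weights
    model: List[Tuple[float, Stump]] = []
    for _ in range(rounds):
        stump, err = best_stump(X, y, w)
        if err >= 0.5:                     # no better than chance: stop
            break
        err = max(err, 1e-10)              # avoid division by zero for a perfect stump
        c = math.log((1.0 - err) / err)
        model.append((c, stump))
        if err <= 1e-10:                   # training data already separated
            break
        # Multiply the weights of misclassified samples by exp(c), then renormalize.
        w = [wi * math.exp(c) if stump_predict(stump, xi) != yi else wi
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def adaboost_predict(model: List[Tuple[float, Stump]], x: Sequence[float]) -> int:
    """F(x) = sign(sum_m c_m * f_m(x)); a score of exactly 0 is mapped to +1."""
    score = sum(c * stump_predict(stump, x) for c, stump in model)
    return 1 if score >= 0 else -1


# Hypothetical 1-D data: no single stump separates it, but three boosted stumps do.
X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [1, 1, -1, -1, 1, 1]
model = train_discrete_adaboost(X, y, rounds=3)
print([adaboost_predict(model, x) for x in X])  # [1, 1, -1, -1, 1, 1]
```

The exhaustive threshold search keeps the sketch short but costs O(features × samples²) per round, so it only suits toy data. In OpenCV 3.x the four variants listed above are selected through the boost type of `cv::ml::Boost` (`Boost::DISCRETE`, `Boost::REAL`, `Boost::LOGIT`, `Boost::GENTLE`), which is the natural starting point for the real implementation.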

10/12/2016

There are two main approaches to face detection.

1) Image-based methods: a classifier is trained statically on a given set of examples and then scanned across the whole image. Boosting techniques improve such classifiers.

2) Feature-based methods: particular facial features, such as the eyes and nose, are detected.
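The scanning step of the image-based approach can be sketched as a single-scale sliding window in plain Python. The `bright` function below is a hypothetical stand-in for a trained (for example boosted) face classifier, and the toy image is made up; real detectors such as OpenCV's `CascadeClassifier::detectMultiScale` also rescale the search to find faces of different sizes.

```python
from typing import Callable, List, Sequence, Tuple

Window = Tuple[int, int, int, int]  # (x, y, width, height)


def sliding_window_detect(
    image: Sequence[Sequence[float]],
    classify: Callable[[List[List[float]]], bool],
    win: int,
    step: int = 1,
) -> List[Window]:
    """Slide a win x win window over a grayscale image and keep the windows `classify` accepts."""
    height, width = len(image), len(image[0])
    hits: List[Window] = []
    for y in range(0, height - win + 1, step):
        for x in range(0, width - win + 1, step):
            patch = [list(row[x:x + win]) for row in image[y:y + win]]
            if classify(patch):
                hits.append((x, y, win, win))
    return hits


def bright(patch: List[List[float]]) -> bool:
    """HYPOTHETICAL stand-in for a trained face classifier: mean intensity >= 0.9."""
    return sum(map(sum, patch)) / (len(patch) * len(patch[0])) >= 0.9


# Toy 8x8 image that is dark except for a bright 3x3 block at columns 3-5, rows 2-4.
image = [[1.0 if 2 <= r <= 4 and 3 <= c <= 5 else 0.0 for c in range(8)] for r in range(8)]
print(sliding_window_detect(image, bright, win=3))  # [(3, 2, 3, 3)]
```

Every window is a separate classification, which is why keeping the per-window false positive rate very low matters so much for this approach.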

$$fn = 1 - d$$

- Detection rate (d): the percentage of faces in the image that are correctly detected. We want to maximize this.

- False negative rate (fn): the rate of faces that are missed.

- False positive rate (fp): the rate of non-face windows that are classified as faces. We want to minimize this.
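The three rates above can be computed from raw counts as in this small sketch. The function name, its arguments and the example counts are hypothetical; the point is that `fn` follows directly from `d`, while `fp` is normalized by the number of non-face windows rather than by the number of faces.

```python
def detection_metrics(faces: int, detected: int, false_windows: int, nonface_windows: int):
    """Return (d, fn, fp) as fractions in [0, 1].

    faces: number of faces actually present in the test images
    detected: how many of those faces were correctly detected
    false_windows: non-face windows wrongly classified as faces
    nonface_windows: total number of non-face windows examined
    """
    if faces <= 0 or nonface_windows <= 0:
        raise ValueError("need at least one face and one non-face window")
    if not (0 <= detected <= faces) or not (0 <= false_windows <= nonface_windows):
        raise ValueError("counts out of range")
    d = detected / faces
    fn = 1.0 - d                  # every face is either detected or missed
    fp = false_windows / nonface_windows
    return d, fn, fp


# Hypothetical counts: 18 of 20 faces found, 5 false alarms among 10,000 non-face windows.
d, fn, fp = detection_metrics(20, 18, 5, 10_000)
print(f"d={d:.2f} fn={fn:.2f} fp={fp:.4f}")  # d=0.90 fn=0.10 fp=0.0005
```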

10/19/2016

- Had a meeting with Bill, Prof. Yin, his PhD student Peng, and the capstone team in the ITC building.

- Discussed what will be implemented and what the project will require in general.

- The capstone team consists of 6 undergraduate students who are responsible for the hardware part of the project. They will design the camera placement and the movement of the wheelchair, using the signal we construct from the patient's eye movements to drive it.

- We will use the camera to get a video stream of the patient's eyes. From it we will construct a signal that holds the eye direction and movements, which will be processed to move the wheelchair.

- Basically, we will first detect the face, then the eyes and the movement of the irises, in order to generate a signal that indicates where the patient is looking and where he or she wants to go. We will then send this information to the hardware to be turned into action.

- By the end of the semester we will have a wheelchair that goes where the patient looks, without the need for control sticks or buttons. A single camera will hang from a cord, facing the patient's head.

- With further development, the technology could also serve as a mood detector for truck drivers, helping eliminate the human factor in collisions.

10/26/2016

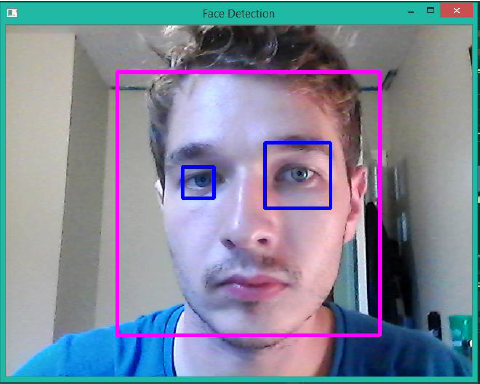

- This week I implemented the face detection program that will serve as the basis for eye-movement tracking.

- Using the techniques discussed in earlier weeks, such as cascade classifiers and the AdaBoost algorithm, my program detects the face and the eyes within it, as shown in the image above.

- The next step will be to generate a central point between the two eyes and to calculate the change of the eye centers in the x and y directions with respect to that point. The two resulting vectors, indicating the change in each direction, will be sent as the output signal to the hardware to move the wheelchair.
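A minimal sketch of the central-point step, in plain Python without OpenCV. It assumes eye detections come as rectangles in the (x, y, width, height) layout that OpenCV's `detectMultiScale` reports, takes each eye center as the center of its rectangle, and compares the current central point with a hypothetical `reference` point recorded while the patient looks straight ahead. All function names and coordinates are illustrative; locating the iris within each eye region would likely track gaze more directly than the rectangle centers used here.

```python
from typing import Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height), as detectMultiScale reports
Point = Tuple[float, float]


def rect_center(r: Rect) -> Point:
    """Center of a detected eye rectangle."""
    x, y, w, h = r
    return (x + w / 2, y + h / 2)


def midpoint(a: Point, b: Point) -> Point:
    """Central point between the two eye centers."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def gaze_displacement(left_eye: Rect, right_eye: Rect, reference: Point) -> Tuple[float, float]:
    """Change (dx, dy) of the central point relative to a reference point.

    ASSUMPTION: `reference` is the central point recorded while the patient looks
    straight ahead. Image coordinates: x grows to the right, y grows downward.
    """
    mid = midpoint(rect_center(left_eye), rect_center(right_eye))
    return (mid[0] - reference[0], mid[1] - reference[1])


# Hypothetical eye rectangles: a calibration frame, then a later frame.
reference = midpoint(rect_center((100, 100, 40, 20)), rect_center((200, 100, 40, 20)))
print(gaze_displacement((106, 96, 40, 20), (206, 96, 40, 20), reference))  # (6.0, -4.0)
```

The x and y components of the result correspond to the two directions of change described above.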

11/02/2016

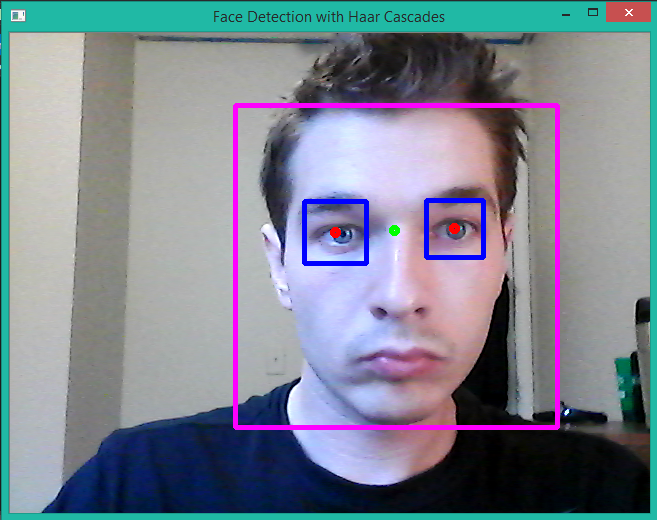

- This week I took the face and eye detection program one step further.

- My program now calculates the centers of the detected eyes and draws a red dot at the location of each iris. Using these centers, it then finds the center point between the two eyes and draws a pink dot.

- Using this pink dot, I will calculate the change in the eye centers, which will indicate where the patient is looking.

- I am considering implementing an arrow that shows the change in the patient's eye position. This way we will know which direction the patient wants to go, or whether he or she wants to slow down or speed up.

- If the patient looks up, he or she wants to speed up; if the patient looks down, we should slow down the wheelchair.
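The up/down rule above, together with left/right steering, could be expressed as a small mapping from a gaze displacement `(dx, dy)` in pixels to commands. This is a hypothetical sketch: the command strings, the `dead_zone` tolerance and its 5-pixel default are assumptions, and a camera facing the patient produces a mirrored image, so "left" and "right" may need to be swapped.

```python
from typing import Tuple


def gaze_to_command(dx: float, dy: float, dead_zone: float = 5.0) -> Tuple[str, str]:
    """Map a gaze displacement (pixels, image coordinates) to (steering, speed) commands.

    Image y grows downward, so looking up gives dy < 0. `dead_zone` is a hypothetical
    tolerance that ignores small jitter around the reference point.
    """
    if dx > dead_zone:
        steering = "right"
    elif dx < -dead_zone:
        steering = "left"
    else:
        steering = "straight"

    if dy < -dead_zone:        # looking up -> speed up
        speed = "speed up"
    elif dy > dead_zone:       # looking down -> slow down
        speed = "slow down"
    else:
        speed = "keep"
    return steering, speed


print(gaze_to_command(0.0, -12.0))  # ('straight', 'speed up')
```

The dead zone keeps the wheelchair from reacting to the small frame-to-frame noise that eye detection inevitably produces.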

11/09/2016

- We set up a meeting in the ITC building next week at 12:00.

- We will talk about further details of the project.

- Everyone associated with the project will be there, including the capstone team, Bill, Peng, and me.

- As I mentioned earlier, the capstone team consists of 6 undergraduate students: 2 computer science, 2 mechanical engineering, and 2 electronic engineering students. They will work on the hardware part of the project. They will take the signal sent by our program, which calculates the direction the user wants to go, and convert it into action to move the wheelchair.

- Prof. Yin, his PhD student Peng, and I are responsible for writing the program that captures the eye movements and calculates the direction the patient wants to go. The software will also sense whether the patient wants to stop or move faster. Prof. Yin will be absent from next week's meeting.

- Bill is the head of the company we work for. He is mainly responsible for coordinating the two teams and checking whether the requirements are satisfied.

11/16/2016

- This week we had a meeting at the ITC building to talk about further specifications and what each team has accomplished so far.

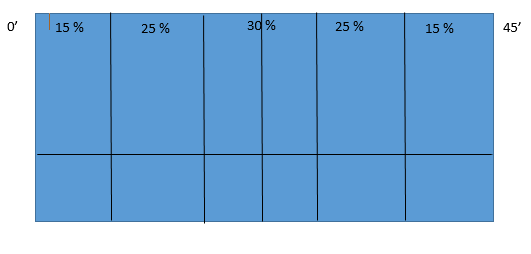

- The capstone team, which is responsible for the wheelchair hardware, showed their design for processing the signal produced by our program. They divide the patient's field of vision into 10 parts, as shown above, together covering a 45-degree interval of the patient's vision. Each part has a different width and is assigned its own velocity value, so that the patient can choose the wheelchair's speed: if he or she looks down, the wheelchair slows down; otherwise it speeds up.

- I gave a demo of my program, which calculates the central point between the patient's irises. The capstone team and Bill gave me useful feedback: the program should react a little more slowly, so that it does not move the patient when he or she glances at something for just a couple of seconds. For example, the program should discard the event created when the patient looks up at a plane or a fly.
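The two ideas from this meeting, sector-based speeds and ignoring short glances, can be combined in one hypothetical Python sketch. It assumes the 10 parts are laid out over a vertical gaze angle from 0 (lowest) to 45 degrees; the edges in `BOUNDARIES` and the values in `VELOCITIES` are placeholders, not the capstone team's design. A sector change is accepted only after it persists for `hold_frames` consecutive frames (another assumed tuning value, roughly frame rate times the tolerated glance duration).

```python
import bisect
from typing import List, Optional

# HYPOTHETICAL layout: 10 parts of unequal width covering a 45-degree interval of
# vertical gaze angle (0 = lowest gaze). Edges and velocities are placeholders.
BOUNDARIES: List[float] = [0, 3, 6, 10, 14, 19, 24, 30, 35, 40, 45]             # 11 edges -> 10 parts
VELOCITIES: List[float] = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 1.0]    # one per part


def sector_of(angle: float) -> int:
    """Index (0..9) of the part containing `angle`, clamped to the covered interval."""
    angle = min(max(angle, BOUNDARIES[0]), BOUNDARIES[-1])
    return min(bisect.bisect_right(BOUNDARIES, angle) - 1, len(VELOCITIES) - 1)


class DwellFilter:
    """Switch to a new sector only after it is seen in `hold_frames` consecutive frames.

    Shorter glances (e.g. looking up at a plane) are discarded and the last accepted
    sector is kept.
    """

    def __init__(self, hold_frames: int, initial: int) -> None:
        self.hold_frames = hold_frames
        self.accepted = initial
        self.candidate: Optional[int] = None
        self.count = 0

    def update(self, sector: int) -> int:
        if sector == self.accepted:          # gaze is back on the current sector
            self.candidate, self.count = None, 0
            return self.accepted
        if sector == self.candidate:         # the same new sector again
            self.count += 1
        else:                                # a different new sector: start counting
            self.candidate, self.count = sector, 1
        if self.count >= self.hold_frames:   # held long enough: accept it
            self.accepted, self.candidate, self.count = sector, None, 0
        return self.accepted


# Hypothetical per-frame gaze angles: a 2-frame glance up to 44 degrees is ignored,
# while a 3-frame look at 33 degrees is accepted.
f = DwellFilter(hold_frames=3, initial=sector_of(22.0))
angles = [22.0, 44.0, 44.0, 22.0, 22.0, 33.0, 33.0, 33.0]
speeds = [VELOCITIES[f.update(sector_of(a))] for a in angles]
print(speeds)  # [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.7]
```

Counting consecutive frames is one simple way to make the program "work a little slower"; a time-based threshold or a moving average over recent gaze angles would be reasonable alternatives.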