Vehware Project

"''Single camera detecting person via OpenCV - Senior Project for a dual diploma team that would put together a "motion detection" mostly "person motion detection" from a video stream. The goal would be to alert a truck driver when he is backing up that a person is behind the truck. I checked out OpenCV as the basis for this capability using python. OpenCV comes with a body detector and we have used it in some of our work, so adapting it to a student project should be reasonable. The challenge is to get representative and realistic data so that you can define and then estimate an error metric''"

Huseyin M Albayraktaroglu

Okan Gul

Ahmet M Hacialiefendioglu

'''Final video'''

[[File:Side_vehicle_detection.png]]

https://www.youtube.com/watch?v=9Mh-_dt8fPU

== Problem analysis: ==

In this section, we investigate the issue in depth to answer two questions: what is the issue, and what causes it?

== Meeting Notes and General Notes ==

'''Week9 ~ October 26 2015:'''

@Meeting

-Talked about the current state of the project

-Demonstrated demo code for color-based object detection

-A camera was supplied to work with

@General Notes

-The demo code is buggy

-Got advice about the Senior Project presentation

-Focus on the first 30 seconds of the project presentation

-Focus on the dlib.net and OpenCV object detection libraries

'''Week10 ~ November 4 2015:'''

@Meeting

-We showed and discussed our latest code samples

-One sample detects an initial color to track and applies a threshold to the picture; it then shows the result as a white object on a black background

-We concluded that this is not a good way to proceed

-Talked about frame difference comparison and object tracking

-Talked about problems caused by background movement

-Talked about whether to handle tracking based on the direction of movement

-Discussed Optical Flow

-Discussed a graph showing different object tracking options (Point Tracking, Kernel Tracking, Silhouette Tracking)

-Talked about the long-range tracker topic (since it is a general term, we couldn't find documents specifically about OpenCV object tracking)

-Discussed tracking based on speed relative to the environment

-Agreed to move forward with the Optical Flow algorithm for development; we think it solves most of the problems caused by a moving background

'''Week11 ~ November 18 2015'''

-Talked about similar companies such as Mobileye (creating templates for objects / looking at objects and calculating distances / shape-recognition-based algorithms / several algorithms running together)

-Talked about OCR systems

-Blob analysis to keep track of cars (https://www.youtube.com/watch?v=VsN23K7Rzmw)

-We saw that optical flow is not a good way to continue

-Still having problems with the moving camera (it leads to false alarms)

-Checked lectures on iTunes about object tracking

-Talked about the term goal (by the end of the semester we need stable object acquisition and tracking algorithms)

-Talked about GitHub projects

-Talked about extra equipment to work with (mostly working in Linux and Unix environments)

'''Week12 ~ November 23 2015 ''' |

|||

-Frame difference method gives better results |

|||

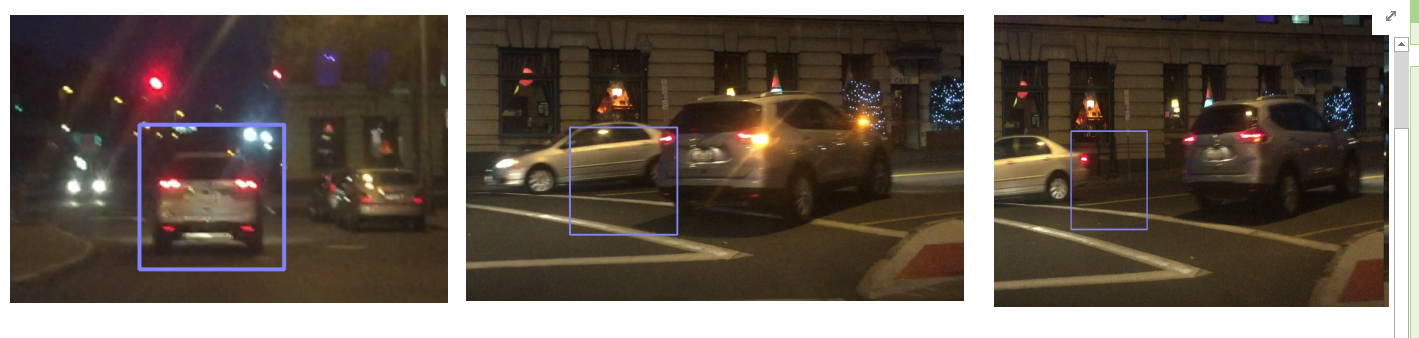

[[File:Results.PNG]] |

|||

object-tracking sample github project |

|||

( buggy code works with template method ) |

|||

[[File:Result2.PNG]] |

|||

-Template correlation algorithm (details going to be add) |

|||

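Since the template correlation details were still to be added, here is a placeholder illustration only: zero-mean normalized cross-correlation template matching in plain Python. It is similar in spirit to OpenCV's `cv2.matchTemplate` with the `TM_CCOEFF_NORMED` method, but it is a sketch, not the code from the GitHub project above. Images are assumed to be gray-scale lists of rows.

```python
import math


def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equally sized patches (-1..1)."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mp, mt = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mp) * (b - mt) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mp) ** 2 for a in p) * sum((b - mt) ** 2 for b in t))
    # A flat patch has no variance, so correlation is undefined; score it 0.
    return num / den if den else 0.0


def match_template(image, template):
    """Slide the template over the image; return (best_score, (x, y)) of its top-left corner."""
    th, tw = len(template), len(template[0])
    best = (-2.0, (0, 0))
    for y in range(len(image) - th + 1):
        for x in range(len(image[0]) - tw + 1):
            patch = [row[x:x + tw] for row in image[y:y + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, (x, y))
    return best
```

The exhaustive scan is quadratic in image size, which is why real implementations use FFT-based correlation or image pyramids.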
'''December 7 2015'''

- Meeting with Bill

== Updates: == |

|||

updated: '''10/17/2015''' |

|||

- team members will install python and opencv in their machines |

|||

- dlib is need to be studied |

|||

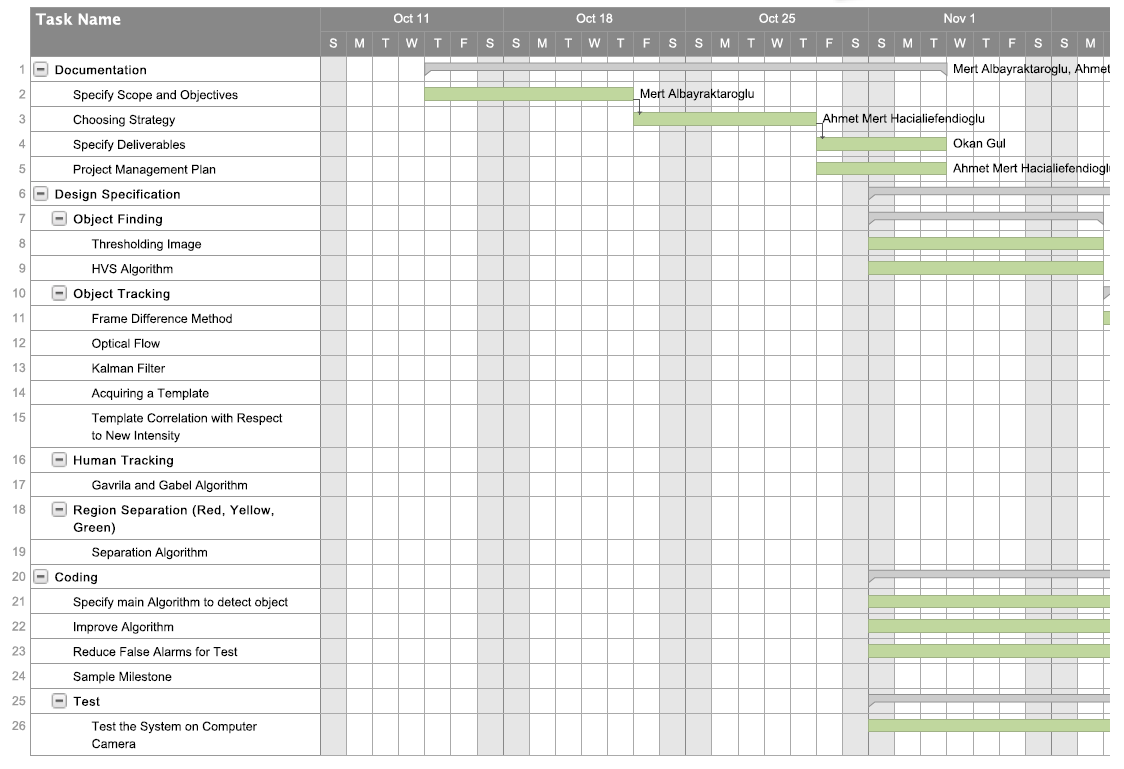

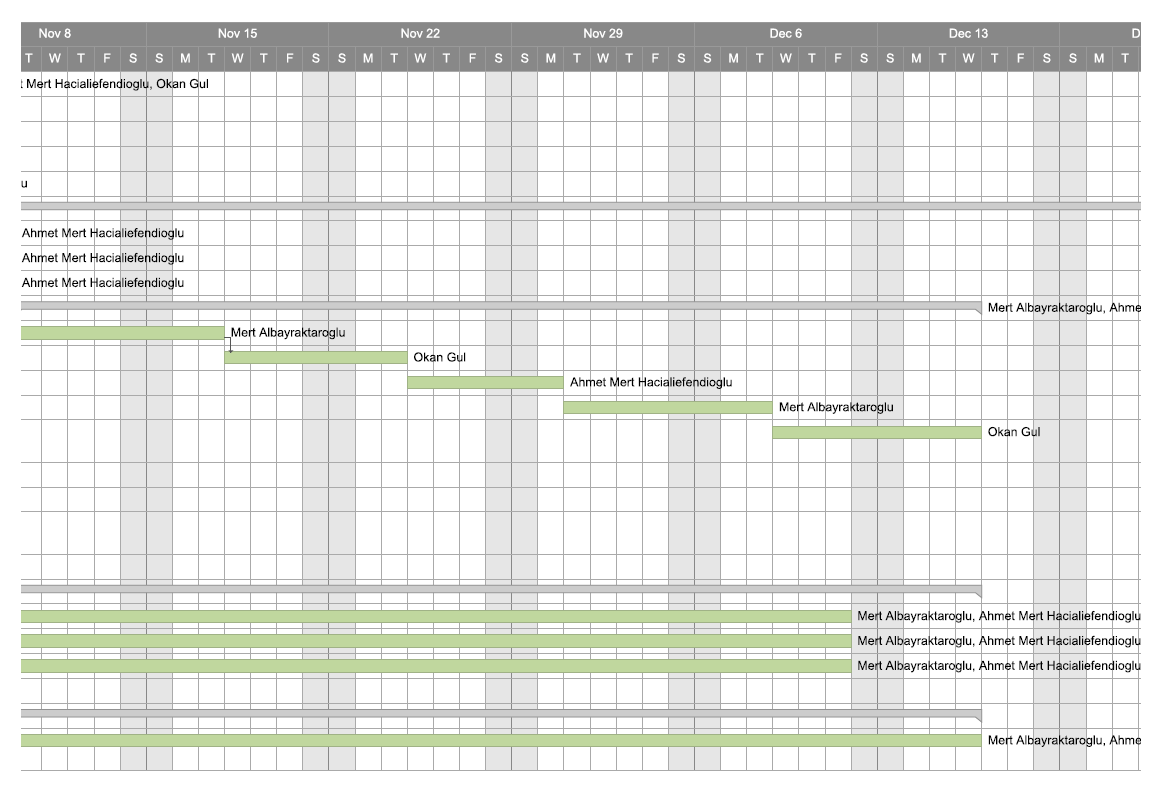

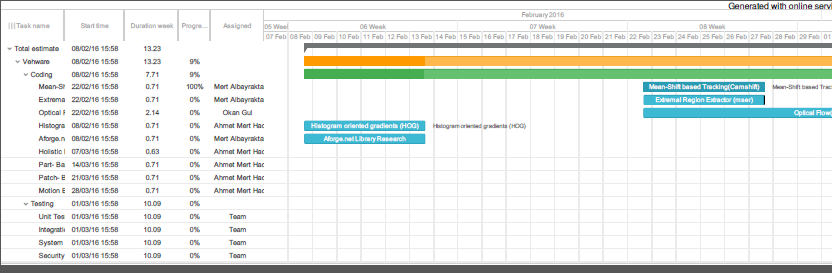

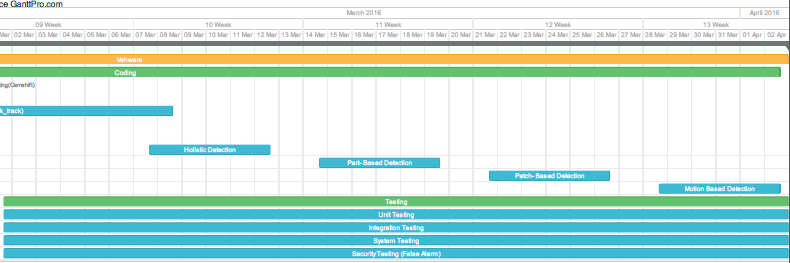

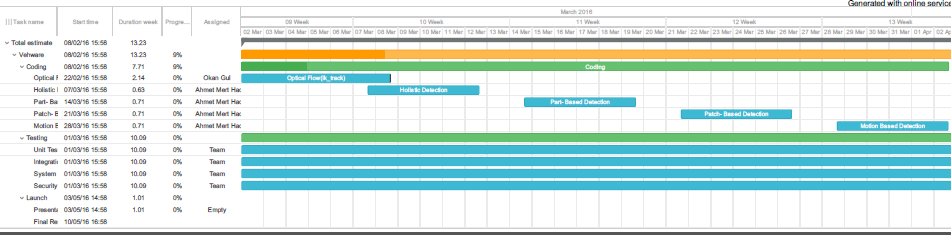

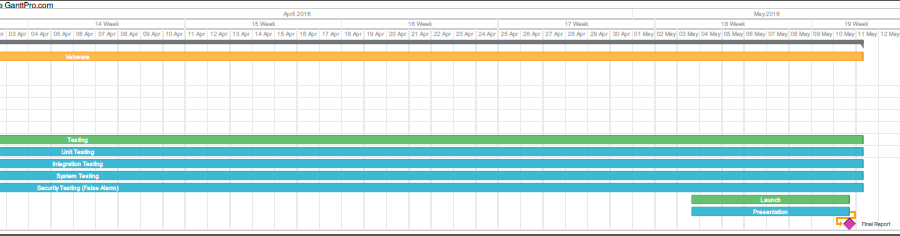

- gantt chart is goingto be added in wiki |

|||

updated: '''10/24/2015''' |

|||

- A camera was prepared by Mr. Bill in order to detect humans easly from a vehicle. The IR filter removed camera has a fisheye lens which has a wider range and closer focus. A sample capture is shown below. |

|||

[[File:WIN_20151024_230249.JPG]] |

|||

- Bill would like the team to focus mostly on object trancking in the first stage rather than detecting humans. |

|||

- Object tracking methods are researched and frame difference algorithm is tested. |

|||

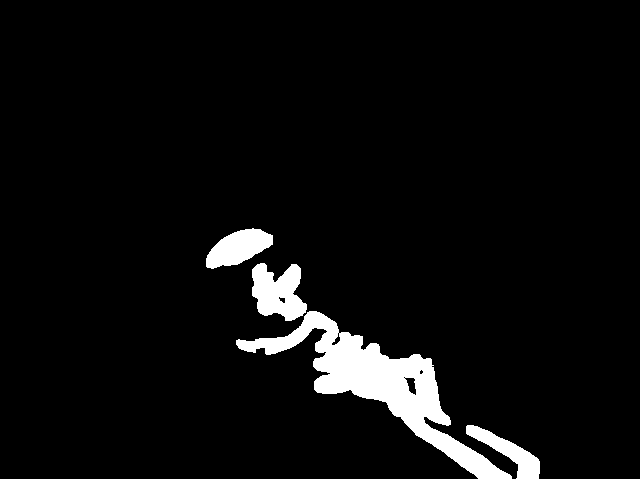

[[File:Capture.png]] [[File:Tresholded_Image.png]] [[File:Treshold_blurred.png]] |

|||

- In this method objects are easly recognized from the tresholded image. Biggest disadvantage of this medhod is not able to get effective result while camera is moving. |

|||

- After the conversation with Prof. Yin, we are advised to consider two algorithm which are Gavrila and Giebel [2002] and Viola et al. [2003]. We will study both paper and share the results in this wikipage. |

|||

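The frame difference test above can be sketched in plain Python as follows. This is not the team's code: frames are assumed to be gray-scale images stored as lists of rows, and the 3x3 box blur and the threshold of 25 are illustrative values. In OpenCV the same pipeline would usually be built from `cv2.absdiff`, `cv2.blur` and `cv2.threshold`.

```python
def abs_diff(frame_a, frame_b):
    """Per-pixel absolute difference of two equally sized gray-scale frames."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def box_blur(img):
    """3x3 mean filter; border pixels average only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) // len(vals)
    return out


def threshold(img, thresh):
    """Binary mask: 255 where the value exceeds thresh, otherwise 0."""
    return [[255 if v > thresh else 0 for v in row] for row in img]


def motion_mask(prev_frame, frame, thresh=25):
    """Frame difference -> blur (suppresses isolated noisy pixels) -> threshold."""
    return threshold(box_blur(abs_diff(prev_frame, frame)), thresh)
```

With a moving camera the whole scene shifts between frames, so static background edges also end up in the mask, which matches the disadvantage noted above.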
updated: '''10/25/2015'''

- Unlike the frame difference method, today the HSV filtering method was used to track an object. In this method, the color range of the selected object is determined in the HSV color space. The thresholded image shows only the pixels whose colors are close to the selected object's color in HSV space.

- The issue with this method is that even though HSV filtering can handle the moving-camera scenario, pedestrians do not always wear the same color, so they cannot be detected and classified by their colors.

[[File:OriginalImage.png]] [[File:TresholdedImage.png]]

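As a sketch of the HSV filtering idea (not the team's code), the snippet below tests each RGB pixel against a hue/saturation/value range using Python's standard `colorsys` module. The range bounds used with it are illustrative assumptions. In OpenCV, `cv2.cvtColor` with `cv2.COLOR_BGR2HSV` followed by `cv2.inRange` is the usual equivalent, keeping in mind that OpenCV's 8-bit HSV uses H in 0-179 and S, V in 0-255, while `colorsys` uses 0-1 for all three.

```python
import colorsys


def in_hsv_range(rgb, lower, upper):
    """True if an (r, g, b) pixel with 0-255 channels lies inside [lower, upper] in HSV.

    lower/upper are (h, s, v) tuples on colorsys's 0-1 scale.
    """
    r, g, b = (c / 255.0 for c in rgb)
    hsv = colorsys.rgb_to_hsv(r, g, b)
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))


def hsv_mask(image, lower, upper):
    """Binary mask (255/0) of the pixels whose color falls in the HSV range."""
    return [[255 if in_hsv_range(px, lower, upper) else 0 for px in row]
            for row in image]
```

Hue wraps around at 0/1, so selecting a red object in practice needs two hue intervals (one near 0 and one near 1) combined with a logical OR.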
updated: '''10/27/2015''' |

|||

- The gantt chart is updated. |

|||

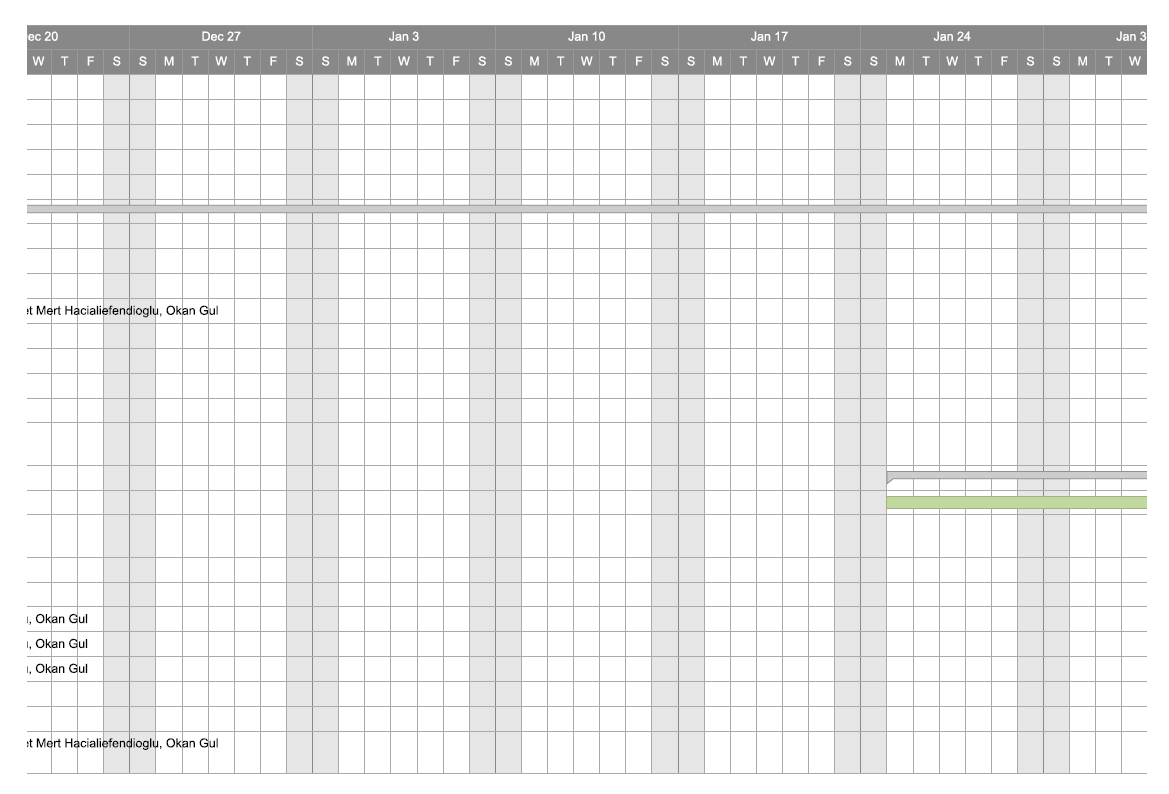

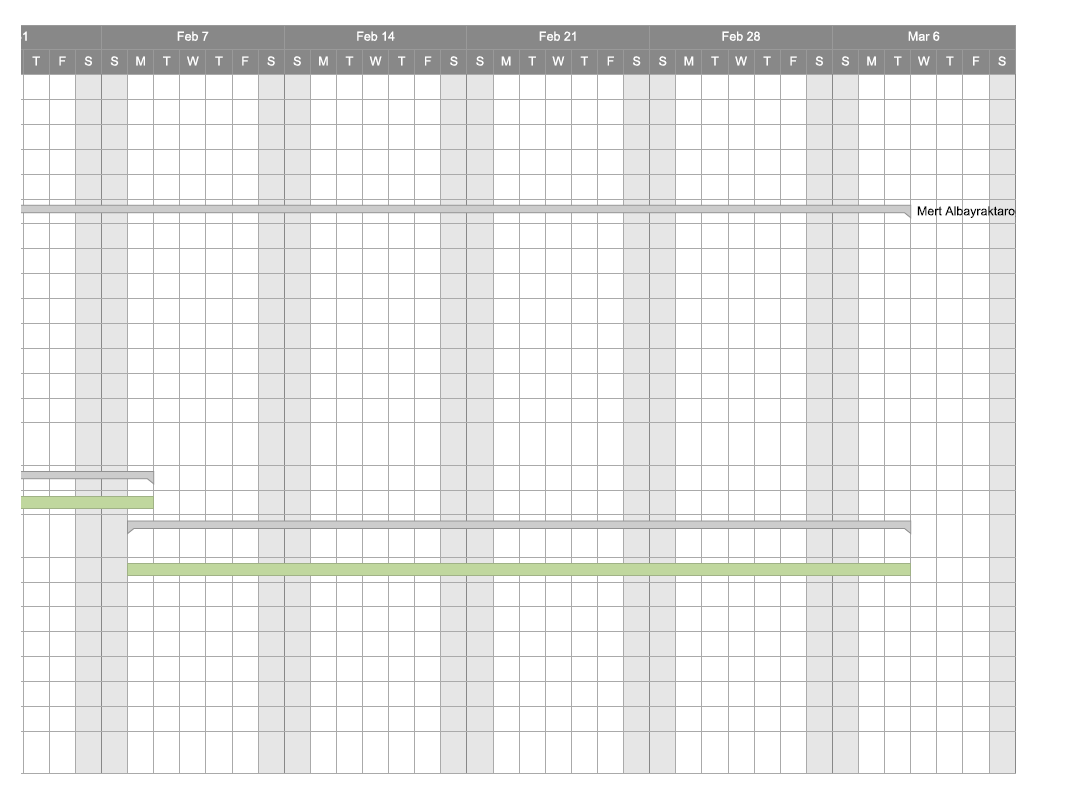

[[File:2015-11-17_14_03_01-Vehware.pdf_-_Adobe_Reader.png]] |

|||

[[File:2015-11-17_14_03_26-Vehware.pdf_-_Adobe_Reader.png]] |

|||

[[File:2015-11-17_14_03_35-Vehware.pdf_-_Adobe_Reader.png]] |

|||

[[File:2015-11-17_14_03_46-Vehware.pdf_-_Adobe_Reader.png]] |

|||

== Project Plan: ==

'''1 DEFINITION''':

There have been several cases of truck drivers hitting a worker behind the vehicle. Because trucks are long and their trailers block the driver's view, such accidents are almost inevitable. To make backing up safer for truck drivers, we propose mounting a system at the back of the trailer that warns the driver whether or not a person is behind it. With this project, we as the Vehware team would like to reduce the number of lives lost and the physical damage caused.

'''1.1 Project Background and Overview'''

The primary focus of the project is detecting humans behind the truck and warning the driver. If the situation becomes too dangerous, the system may intervene and stop the vehicle.

A list of prerequisites and key reasons for launch: to achieve this, the first goal is detecting the human body. This can be solved with several different system designs. The first method uses an Intel RealSense camera, which can determine the distance of an object (a human, in this case), so no algorithm for estimating the distance between the camera and the human would need to be implemented. The second method uses an IR (thermal) camera to get a clearer view. Such cameras detect the temperature of all objects in the camera's view. Because humans are assumed to be warmer than their surroundings in a construction zone, detecting humans should be more straightforward in the video stream of an IR camera than with other cameras. The third option is using a Kinect (costly; explanation to be added).

'''1.2 Business Objectives'''

The Vehware project will give team members experience with a professional development process and its deliverables. We will apply our knowledge of Python, C++, streaming media, OpenCV, software development, and product documentation. We will learn the software industry's expectations for design, planning, and development. In addition, we will learn the expected level of timely company communication (i.e., presentations, status updates, progress to plan, etc.). Our main customers will be companies that use big trucks in their business.

At the first stage of the project, team members agreed on a step-by-step approach to planning and development. The project's main focus is warning truck drivers before dangerous situations that could have bad outcomes, and our team aims to reduce those problems. The prototype we produce starts with recognizing people in a video stream. After we establish the main structure of the object recognition, we will extend the prototype and add more analysis of dangerous situations behind the truck. These improvements increase safety behind trucks.

'''1.3 Project Objectives'''

Short-term objectives: Apply an algorithm that detects humans in a picture or video stream. It does not have to be optimized for working on real trucks. Short-term goals are mainly focused on developing a prototype for demonstration and obtaining investment with it.

Medium-term objectives: Improve the algorithm so that it also tracks humans.

One of the medium-term objectives is decreasing false negative events. Here, a false negative event is one where the algorithm fails to find a human in a capture even though one is actually there. The algorithm should become more sensitive in finding humans in the camera's view.

Long-term objectives: After the human tracking system is developed and tested on trucks, the project would be extended to check the truck's other blind spots. The system can warn the driver if any vehicle is traveling into the truck's blind spot area. This part of the project makes turning safer for a truck.

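The project description asks for an error metric, and the medium-term objective is fewer false negatives. One simple way to quantify both is per-frame counting against hand-labelled ground truth. The sketch below assumes each frame is labelled only as "person present / absent", which is a simplification; matching individual detection boxes to labelled people would be stricter.

```python
def detection_error_rates(ground_truth, detections):
    """Frame-level miss rate (false negatives) and false alarm rate (false positives).

    ground_truth[i] is True if a person is really present in frame i;
    detections[i] is True if the detector reported a person in frame i.
    """
    tp = fn = fp = tn = 0
    for present, detected in zip(ground_truth, detections):
        if present and detected:
            tp += 1
        elif present:
            fn += 1  # false negative: a person was missed
        elif detected:
            fp += 1  # false positive: a false alarm
        else:
            tn += 1
    miss_rate = fn / (tp + fn) if tp + fn else 0.0
    false_alarm_rate = fp / (fp + tn) if fp + tn else 0.0
    return miss_rate, false_alarm_rate
```

Lowering a detector's threshold usually trades a lower miss rate for a higher false alarm rate, so both numbers should be reported together.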
'''1.4 Project Constraints'''

Our project has some limitations that may influence how we manage it and how we proceed. We group these constraints into several categories.

Time frames: Our design specifications and a simple prototype must be completed by the first week of December 2015. The complete work must be finished by the end of May 2016.

Resources: To begin with, we need a simple video recorder or any other device that provides a continuous video stream, so that we can examine the data coming from it. For the early prototype we work with personal phones. For the development part, three software developers will work on the project for eight months.

Activity performance: For the Vehware project, all team members have basic knowledge of image processing and project documentation.

Scope: The scope of the project includes almost all dangers involving people. Since the area behind the truck is almost invisible to the driver, our early prototype can identify people behind the truck and warn the driver. Later versions of the project will divide the area behind the truck into parts (according to the probability of danger).

Challenges: Lighting conditions, sufficient equipment, pose

Cost: Not calculated yet.

'''1.5 Project Scope'''

Steps to detect humans in a video stream:

According to the article "A survey of techniques for human detection from video" by Neeti A. Ogale, humans can be detected based on their features (shape, color, motion). The article presents two main techniques: "background subtraction" and "direct detection".

In background subtraction, each frame is compared with a model of the background, and a threshold is applied to the gray-scale difference: pixels whose difference exceeds the threshold are treated as foreground objects (cars, humans, signs, etc.), and the remaining pixels are background, which is not needed for this project. Background subtraction requires further steps after the foreground has been extracted, whereas direct detection finds humans in a single process.

http://www-lb.cs.umd.edu/Grad/scholarlypapers/papers/neetiPaper.pdf

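The background subtraction step described above can be sketched as follows. The sketch assumes a running-average background model, which is one common choice among several covered by the survey, and gray-scale frames stored as lists of rows; `alpha` and `thresh` are illustrative values. OpenCV offers ready-made alternatives such as `cv2.accumulateWeighted` or `cv2.createBackgroundSubtractorMOG2`.

```python
def update_background(background, frame, alpha=0.05):
    """Running-average background model: B <- (1 - alpha) * B + alpha * F."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]


def foreground_mask(background, frame, thresh=30):
    """1 where the gray level differs from the background by more than thresh (foreground), else 0."""
    return [[1 if abs(f - b) > thresh else 0 for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]
```

A small `alpha` makes the model adapt slowly, so a person standing still stays in the foreground longer; a large `alpha` absorbs slow lighting changes faster but also absorbs stationary people.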
'''1.6 Project Scope Inclusions'''

''Histograms of Oriented Gradients for Human Detection: The histogram of oriented gradients (HOG) is a feature descriptor used in computer vision and image processing for the purpose of object detection. The technique counts occurrences of gradient orientation in localized portions of an image. This method is similar to that of edge orientation histograms, scale-invariant feature transform descriptors, and shape contexts, but differs in that it is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization for improved accuracy.

Navneet Dalal and Bill Triggs, researchers for the French National Institute for Research in Computer Science and Automation (INRIA), first described HOG descriptors at the 2005 Conference on Computer Vision and Pattern Recognition (CVPR). In this work they focused on pedestrian detection in static images, although they have since expanded their tests to include human detection in videos, as well as a variety of common animals and vehicles in static imagery.''

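To make "counts occurrences of gradient orientation in localized portions of an image" concrete, here is a minimal sketch of a single HOG cell histogram in plain Python. The 8x8 cell and 9 unsigned orientation bins (0 to 180 degrees) follow the settings reported by Dalal and Triggs, but the hard binning and the missing block normalization are simplifications; OpenCV's `cv2.HOGDescriptor` computes the full descriptor.

```python
import math


def cell_histogram(img, x0, y0, cell=8, bins=9):
    """Orientation histogram of one HOG cell whose top-left corner is (x0, y0).

    Gradients use the centered [-1, 0, 1] kernel. Each pixel adds its gradient
    magnitude to the bin containing its unsigned orientation; the full
    descriptor additionally interpolates between bins and normalizes blocks.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    h, w = len(img), len(img[0])
    for y in range(y0, y0 + cell):
        for x in range(x0, x0 + cell):
            # Clamp at the image border so every pixel has a neighbour on each side.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # "% bins" guards against angle rounding up to exactly 180.0.
            hist[int(angle // bin_width) % bins] += magnitude
    return hist
```

For a vertical edge, all gradients point horizontally, so the whole magnitude lands in the 0-degree bin. This is the kind of shape signature the detector's classifier learns from.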
'''2 APPROACH'''

'''2.1 Project Strategy'''

To succeed in our project, we decided to clarify our goals and plans by time frame (short-term and long-term plans). Our basic steps in the Vehware project are listed below:

1-Documentation and Planning

2-Preparing appropriate test materials

3-Working on human detection algorithms, documentation and articles

4-Development

5-Testing

'''2.2 The Project Schedule'''

== Possible solutions: ==

After analyzing the problem, we consider possible solutions on both the hardware and the software side.

'''Software solutions:'''

<nowiki>Intel RealSense camera: able to determine the distance of an object, short range, low price compared to Kinect.

The paper[http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=1565316&url=http%3A%2F%2Fieeexplore.ieee.org%2Fxpls%2Fabs_all.jsp%3Farnumber%3D1565316] can be a reference since it was presented at a conference. ''If a paper has been cited 150 times and more than 10 years have passed since, the problem must have been solved.'' We should study it carefully once we have access to the BU network.

kinect: higher range, able to determine the movement of a human by default, high price

double cam: cheapest solution.

Infrared cam: reasonable cost ($40), easy to detect humans, difficult to detect other objects.</nowiki>

'''Hardware solutions:''' Which hardware fits the possible solutions best? A camera with image processing, or Kinect-like motion trackers? Can a Raspberry Pi 2 or similar board handle these software solutions?

Input devices:

[[intel realsense Camera]]: able to determine the distance of an object, short range, low price compared to Kinect.

[[kinect]]: higher range, able to classify the movement of a human by default, high price ($150)

[[double cam]]: cheapest solution; more programming work and CPU power required

[[Infrared cam]]: reasonable cost ($40), easy to detect humans, difficult to detect other objects.

Input devices are listed based on a PhD student's suggestions.

'''01/31/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc.'''

-Discussed the CPT process and printed the forms.

- Attachments A and B of the CPT forms were updated

- CPT forms were prepared

- Previous Senior Project code was prepared to be sent to Merwyn Jones

-Spoke with Mr. Bill about the semester project. He identified a few to-dos to optimize the existing code. We also talked about what has been achieved so far and about publicly launched technologies we can learn from to guide our work.

-Decided on future goals, talked about which algorithms to use, and discussed their pros and cons. The team would also like Mr. Bill to help us find someone we can ask about problems with our code and algorithms.

- A detailed new Gantt chart will be prepared and uploaded here, based on the selected human and car detection algorithms

-This semester, we are going to deliver the plan items via the Gantt chart and end up with a program that provides:

1 Automatic detection of cars

2 Notification to the driver

3 Danger zones

4 Human detection and tracking

5 Reduced false alarms

- As a team, we divided the tasks and started working on our individual goals (such as a detailed Gantt chart, finding and trying human detection algorithms, and pedestrian detection with machine learning)

Huseyin is assigned to research vehicle detection by license plates

Ahmet: research pedestrian detection using HOG, and the application of machine learning to object detection

Okan: study the example code of an autonomous RC car

'''02/07/2016 Weekly activities including accomplishments, problems changes to plan, meeting minutes, etc...''' |

|||

-Ganttchart will be updated upon the decision made on meeting with Mr. Bill |

|||

-CPT forms were signed and handed in to Watson office. |

|||

-During that time team members did their independent researches |

|||

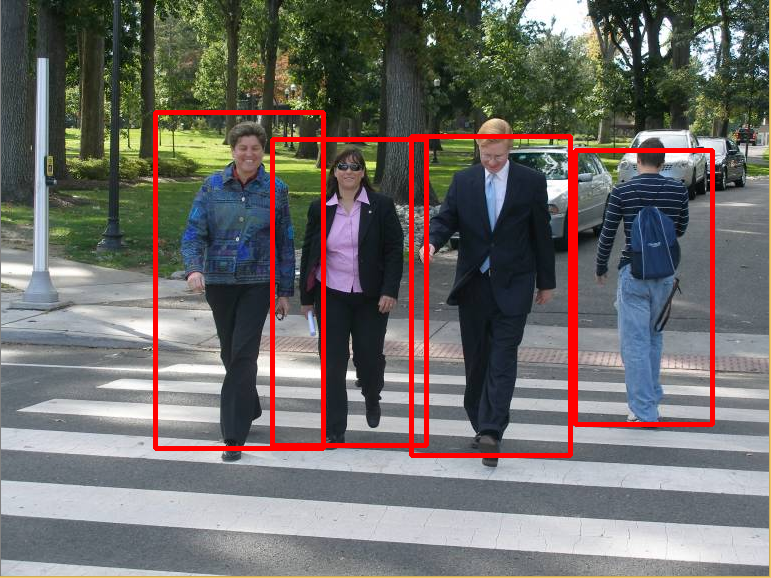

-Histogram oriented gradients method is studied. |

|||

-Histogram oriented gradients (HOG) aimed to used to find "gradient" of a pixel. What the gradient information contains whether the pixel is on an edge line, direction of this edge, and how strong is this edge (src) |

|||

-Human body has a specific shape and can be easily distinguished among other object by its gradient properties. But in order to do that developer needs a pre-trained HOG features. |

|||

[[File:2016-02-07 22 16 07-people detector.png]] |

|||

This photo is the output of the built-in HOG function of OpenCV. While It seems that method gives accurate results, the amount of time that the method spends is too much for a picture. It needs to be reduced. |

|||

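One reason the built-in HOG detector is slow is the number of windows it has to classify per frame. The sketch below approximates how many sliding-window positions an image pyramid produces. The 64x128 window matches OpenCV's default people detector; the 8-pixel stride and the 1.05 scale step are assumed values close to common `detectMultiScale` settings, and OpenCV's actual level and padding bookkeeping differs in detail.

```python
def count_windows(width, height, win_w=64, win_h=128, stride=8, scale=1.05):
    """Approximate number of sliding-window positions over an image pyramid.

    The image is shrunk by `scale` at each level until it is smaller than the
    detection window; at each level the window moves `stride` pixels at a time.
    """
    total = 0
    s = 1.0
    while True:
        w, h = int(width / s), int(height / s)
        if w < win_w or h < win_h:
            break
        total += ((w - win_w) // stride + 1) * ((h - win_h) // stride + 1)
        s *= scale
    return total
```

Comparing `count_windows(640, 480)` with `count_windows(320, 240)` suggests why downscaling frames, a coarser stride, or a larger scale step should reduce processing time, at the cost of missing small or distant people.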
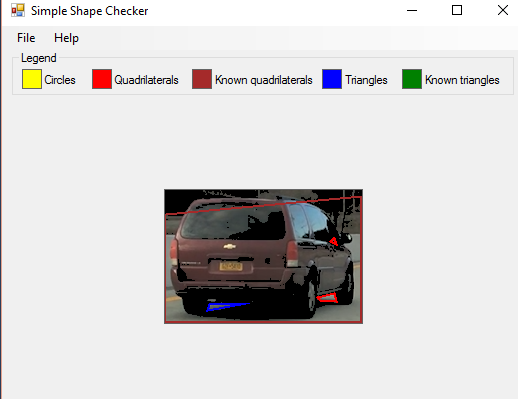

-Detecting cars based on their general properties might be good idea for automatic detection because of that focused on plate recognition |

|||

-Cars has certain shape of plates only difference between them is their colors |

|||

-Detecting vehicles by plates could decrease calculation and make algorithm faster |

|||

-Disadvantage of the plate based recognition of the car is when an out ridden car might be out of range in small distance when we compare with the holistic detection of the car |

|||

-Started to search on Aforge.net library which include faster algorithm on shape recognition. |

|||

-Encountered with significant level of false alarms |

|||

[[File:Shapedetector.png]] |

|||

'''02/14/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc.'''

- This week, all team members will research how to capture cars from the sides of the vehicle

- Based on that research, Mr. Bill and the team members will decide on the topic to concentrate on

[[File: Task.png]]

After a meeting, we decided to share the tasks among team members.

Okan and Huseyin will work on detecting and tracking vehicles in the blind spot of a truck.

Ahmet will work on pedestrian detection.

These two will be our main topics for this semester.

'''02/21/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc.'''

Gantt chart needs to be posted here!

Results of meeting with Mr. Bill:

Next meeting date with Mr. Bill is 02/22

-By the end of this week, we are expected to have a video shot from a camera at the back of the vehicle for testing purposes.

-HOG will be run on the video stream and the algorithm's performance will be analyzed.

-We will start implementing a method that measures the distance between a pedestrian and the vehicle.

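As a possible starting point for the planned distance measurement, a common approximation is the pinhole camera model. If a detected person's box is h pixels tall, the focal length is f pixels and the person's real height is H metres, the distance is roughly f * H / h. The sketch below assumes an average height of 1.7 m and an already known focal length; both are assumptions. With the fisheye lens described on 10/24, the image would first have to be undistorted (for example using OpenCV's `cv2.fisheye` calibration functions) for this formula to hold.

```python
def estimate_distance_m(box_height_px, focal_length_px, person_height_m=1.7):
    """Pinhole-camera distance estimate from the height of a detection box.

    distance = f * H / h, where f is the focal length in pixels, H the assumed
    real height of the person in metres, and h the box height in pixels.
    """
    if box_height_px <= 0:
        raise ValueError("box height must be positive")
    return focal_length_px * person_height_m / box_height_px
```

The estimate is only as good as the box: HOG boxes include some margin around the body, and children or crouching workers break the fixed-height assumption.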
Blind Spot Car Detection

- Mean shift based tracking: CamShift

[[File: first.gif]]

- Maximally stable extremal region (MSER) extraction: the algorithm identifies contiguous sets of pixels whose outer boundary pixel intensities are higher (by a given threshold) than the inner boundary pixel intensities. Such regions are said to be maximally stable if they change little as the intensity threshold is varied.

[[File: second.gif]]

- lk_track: sparse optical flow demo. Uses goodFeaturesToTrack for track initialization and back-tracking for match verification between frames.

[[File: thirdd.gif]]

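The "back-tracking for match verification" in the lk_track demo works as follows. Points are tracked forward from the previous frame with `cv2.calcOpticalFlowPyrLK`, then tracked back from the current frame. A track is kept only if it returns within about one pixel of where it started. The check itself is simple; below it is written without OpenCV, assuming the three point lists have already been produced by the two optical flow calls.

```python
def forward_backward_filter(p0, p1, p0_back, max_error=1.0):
    """Keep tracks whose back-tracked position returns close to its start.

    p0: points in the previous frame; p1: the same points tracked forward into
    the current frame; p0_back: p1 tracked backward into the previous frame.
    A track survives when max(|dx|, |dy|) between p0 and p0_back < max_error.
    """
    kept = []
    for start, end, back in zip(p0, p1, p0_back):
        error = max(abs(start[0] - back[0]), abs(start[1] - back[1]))
        if error < max_error:
            kept.append((start, end))
    return kept
```

Tracks that fail this test are typically points that slid along an edge or jumped to a similar-looking texture, which are a common source of the unrelated tracking points described in the following weeks.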
Pedestrian detection

-A video was recorded for testing purposes. In this video, a number of purple human figures were placed behind the vehicle, and the video was recorded while the vehicle was backing up. (Thanks to the Besket team)

-The OpenCV HOG pedestrian detection algorithm was run on the test video.

- After running it, we saw that processing one frame takes about 3 seconds, and the false alarm rate is too high.

- At the meeting with Mr. Bill on 02/22, we decided to use the same method in a Linux environment with Python.

[[File:2016-02-22_15_42_43-WIN_20160220_15_22_27_Pro.mp4.png]]

'''02/28/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc.'''

- This week, team members built on last week's code for blind spot car tracking and pedestrian tracking

- The development team received a laptop with Linux Mint installed on it (03/03/2016)

- The required libraries and tools (Boost, g++, CMake) will be set up on this machine by the end of this week.

[[File:2016-03-07_14_58_50-Vehware(2).pdf_-_Adobe_Reader.png]]

[[File:2016-03-07_15_00_35-Vehware(2).pdf_-_Adobe_Reader.png]]

[[File:2016-03-07_15_00_48-Vehware-2(1).pdf_-_Adobe_Reader.png]]

[[File:2016-03-07_15_01_00-Vehware-2(1).pdf_-_Adobe_Reader.png]]

'''03/06/2016 Weekly activities including accomplishments, problems changes to plan, meeting minutes, etc...''' |

|||

--- For blind spot area car tracking |

|||

--- Team members tried to reduce unrelated tracking points, not found a proper way to reduce unrelated tracking points |

|||

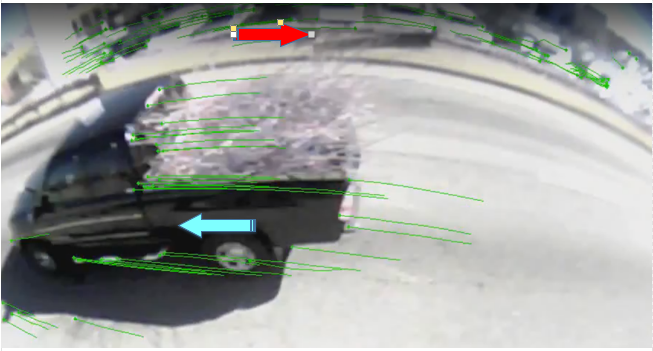

--- Way of moving points different as we see in the picture below. Team members is going to detect reverse point from the normal car direction and erase them. |

|||

--- After removing reverse point we mainly have moving forward points those we need. |

|||

[[File: falsepointt.png]] |

|||

--- Team members find a way to detect a car at blind spot area based on tracking point density (after reduction of the false tracking points) a square will be drawn around the certain points |

|||

--- As we see in the picture after reducing reverse direction points, the rest are mostly belongs to car's points. Detecting that intense point regions will help to detect car. |

|||

[[File: pointdensityyy.png]] |

|||

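The plan to erase points that move opposite to the normal car direction amounts to a sign test on each point's displacement. Below is a hypothetical helper, not the team's code. The expected direction vector and the `min_cos` cutoff are assumptions that would have to be chosen for the actual camera mounting.

```python
import math


def keep_forward_tracks(tracks, expected_direction, min_cos=0.0):
    """Drop tracking points that move against the expected car direction.

    tracks: list of ((x0, y0), (x1, y1)) point positions in two frames.
    expected_direction: (dx, dy) of normal traffic in the image.
    A track is kept when the cosine between its motion and expected_direction
    is greater than min_cos (0.0 keeps anything within 90 degrees).
    """
    ex, ey = expected_direction
    e_norm = math.hypot(ex, ey)
    kept = []
    for (x0, y0), (x1, y1) in tracks:
        dx, dy = x1 - x0, y1 - y0
        norm = math.hypot(dx, dy)
        if norm == 0:
            continue  # a stationary point carries no direction information
        cos = (dx * ex + dy * ey) / (norm * e_norm)
        if cos > min_cos:
            kept.append(((x0, y0), (x1, y1)))
    return kept
```

Raising `min_cos` (for example to 0.7, roughly within 45 degrees) removes more of the noisy points caused by camera shake, at the risk of losing cars that are changing lanes.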
'''03/13/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc.'''

- As mentioned last week, we managed to eliminate the points that flow in the reverse direction

- The code output shows the points that move in the same direction as the car carrying the camera

- To do that, we modified the two-dimensional array that holds the tracking points

- When we increased the speed of the video stream, we saw that we lost some major points

--- The reason for losing some major points is that the video stream skips some frames when its speed is increased (we tried this to get more efficient output)

--- Unfortunately, increasing the video stream speed doesn't help efficiency

[[File: direction.gif]]

--- As seen in the .gif file, the first vehicle's green tracking tails disappeared after it changed its direction

--- As a next step, we will use an algorithm similar to k-means to detect cars and enclose each car in a rectangle

- Found that some of the noise and false alarms are caused by the camera being shaken by the road. A shaking camera makes the code output unrelated tracking points

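The "algorithm similar to k-means" planned above could look like the following sketch. It clusters the remaining (forward-moving) tracking points and then takes an axis-aligned rectangle around each cluster. The number of clusters `k` has to be chosen in advance, which is a real limitation when the number of cars is unknown, and initializing the centroids with the first `k` points is a simplification for readability.

```python
def _nearest(p, centroids):
    """Index of the centroid closest to point p (squared Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda c: (p[0] - centroids[c][0]) ** 2 + (p[1] - centroids[c][1]) ** 2)


def kmeans(points, k, iterations=20):
    """Plain k-means on 2D points; centroids start at the first k points."""
    centroids = [tuple(map(float, p)) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [_nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = (sum(p[0] for p in members) / len(members),
                                sum(p[1] for p in members) / len(members))
    return labels


def bounding_boxes(points, labels):
    """Rectangle (x_min, y_min, x_max, y_max) around each cluster, keyed by label."""
    boxes = {}
    for (x, y), lab in zip(points, labels):
        x0, y0, x1, y1 = boxes.get(lab, (x, y, x, y))
        boxes[lab] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes
```

Each box could then be drawn on the frame, for example with `cv2.rectangle`.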
'''03/20/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc.'''

{| border="1"
!GitHub user
!Language
!Method
!Required libraries
!Status
!Comments
|-
!angus
|C++
|HOG and Haar cascade
|ROS, OpenCV, CMake
|not compiled
|
|-
!dominikz
|C++, Python
|?
|OpenCV, NumPy
|
|This is a compact system that is difficult to edit. Because the explanation in the readme file is a single line, it is difficult to understand how the code works and what the input/output types are. The application requires a dataset whose format is very different from the ones we are familiar with.
|-
!levinJ
|
|Laplacian
|Boost, CUDA, OpenCV, CMake
|not compiled
|The project is divided into subgroups, each of which executes a detection system. The source code can be compiled with CMake. CMake was successfully initialized; however, the Boost library could not be configured.
|-
!
|C++
|HOG
|OpenCV
|compiled
|The OpenCV default function was compiled and run. The code was modified so that it can take video as input. Even though the code could find pedestrians, it had a high false alarm rate and the processing time was too high. Rescaling may be performed.
|-
!chhshen
|C++, Matlab
|Optical Flow
|
|not compiled
|Because the algorithm was developed for research purposes, its input files are too complex (Caltech research). Matlab is used for data training.
|-
!garystafford
|C++
|blob
|OpenCV, cvBlob
|not compiled
|This project requires a library named cvBlob. It also provides a shell script that installs the libraries. However, the .sh file assumes the OpenCV library is not installed on the OS, so I tried to install only cvBlob. It failed.
|-
!youxiamotors
|C++
|HOG
|OpenCV
|not compiled
|Files in the project are clearly explained, and a makefile is provided. However, when the makefile is run, it cannot compile the code because it cannot link the OpenCV library. We need to figure out how to link the OpenCV library and get the project running.
|}

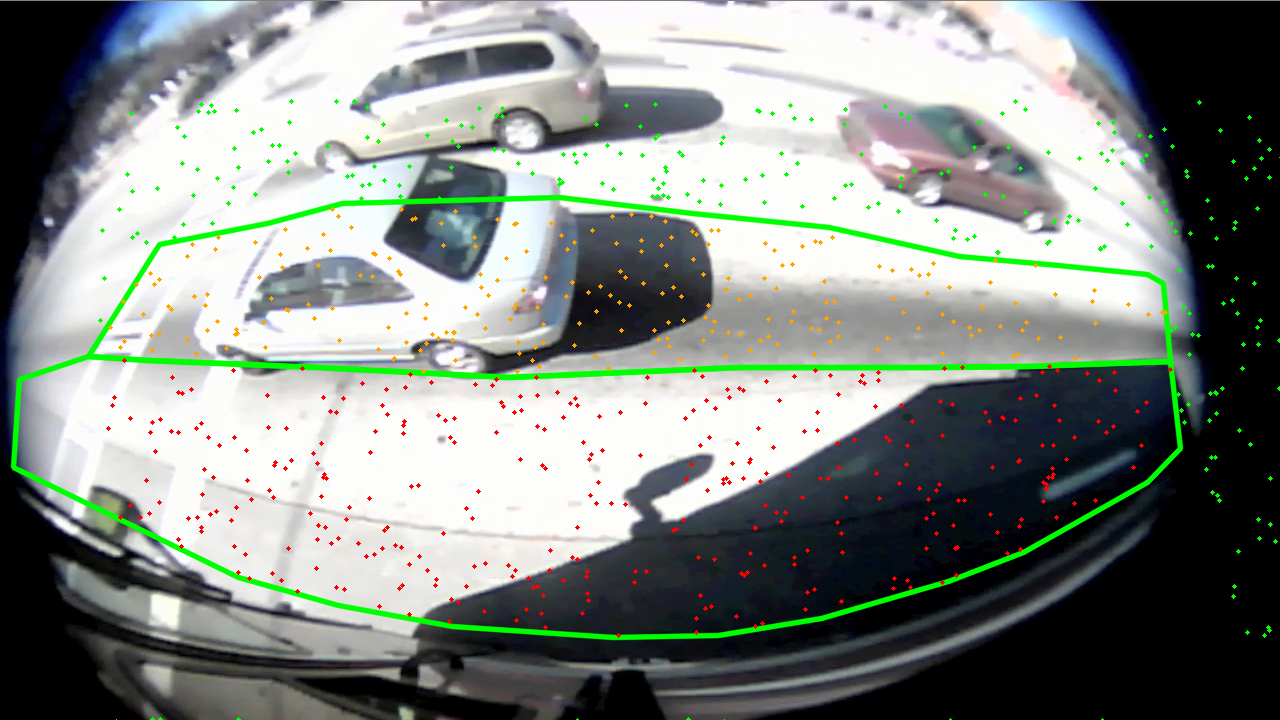

[[File:2016-04-02_11_34_04-Display_window.png]] |

|||

- This video capture divided into three zones which are named as danger zone, warning zone and natural zone.. |

|||

- 1000 points are randomly spreaded on the stream. While the points dropped off danger zone colored red. |

|||

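Here is a minimal sketch of the zone logic. It assumes the zones are horizontal bands of the frame, with the bottom band (closest to the truck in a rear-facing view) as the danger zone. The band boundaries, the random seed and the non-red colors are hypothetical choices, not taken from the team's code. Colors are given in BGR order, as OpenCV drawing functions expect.

```python
import random


def zone_of(y, height, danger_start=2 / 3, warning_start=1 / 3):
    """Hypothetical zone split by image row (y grows downward; bottom = nearest the truck)."""
    if y >= danger_start * height:
        return "danger"
    if y >= warning_start * height:
        return "warning"
    return "neutral"


def color_points(n, width, height, seed=0):
    """Spread n random points over the frame and pick a BGR color per zone (red = danger)."""
    colors = {"danger": (0, 0, 255), "warning": (0, 255, 255), "neutral": (0, 255, 0)}
    rng = random.Random(seed)
    points = [(rng.randrange(width), rng.randrange(height)) for _ in range(n)]
    return [(p, colors[zone_of(p[1], height)]) for p in points]
```

Each `(point, color)` pair could then be drawn with `cv2.circle`. In a real system the zone boundaries would come from calibrating where the ground at given distances appears in the fisheye image.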
Latest revision as of 17:37, 16 September 2016

"Single camera detecting person via OpenCV - Senior Project for a dual diploma team that would put together a "motion detection" mostly "person motion detection" from a video stream. The goal would be to alert a truck driver when he is backing up that a person is behind the truck. I checked out OpenCV as the basis for this capability using python. OpenCV comes with a body detector and we have used it in some of our work, so adapting it to a student project should be reasonable. The challenge is to get representative and realistic data so that you can define and then estimate an error metric"

"Single camera detecting person via OpenCV - Senior Project for a dual diploma team that would put together a "motion detection" mostly "person motion detection" from a video stream. The goal would be to alert a truck driver when he is backing up that a person is behind the truck. I checked out OpenCV as the basis for this capability using python. OpenCV comes with a body detector and we have used it in some of our work, so adapting it to a student project should be reasonable. The challenge is to get representative and realistic data so that you can define and then estimate an error metric"

Huseyin M Albayraktaroglu

Okan Gul

Ahmet M Hacialiefendioglu

Final video

https://www.youtube.com/watch?v=9Mh-_dt8fPU

Meeting Notes and General Notes

Week9 ~ October 26 2015:

@Meeting

-Talked about latest condition of project

-Demonstrated a demo code for object detection based on color

-Supplied a camera to work with

@General Notes

-Demo codes are buggy

-Got advices about Senior project representation

-Focusing on first 30 second in presentation of the project

-Focusing on dlib.net and OpenCV object detection libraries

Week10 ~ November 4 2015:

@Meeting

-We showed and discussed latest code samples that we have

-One sample works like detecting initial color to keep track and applies threshold on the picture. After that process it shows results on white object on black background

-We see that is not good way to proceed on

-Talked about frame difference comparison and object tracking

-Talked problems are caused by background movement

-Talked about whether do we handle the tracking based on direction of the movement

-Discussed about Optical Flow

-Discussed about graph that represents different ways of object tracking ( Point tracking, Kernel Tracking, Silhouette Tracking) options

-Talked about long range tracker topic ( Since it is general term we couldn't find exact documents related with OpenCV object tracking )

-Discussed based on relative speed from environment

-Agreed on move with Optical Flow algorithm to development, we think it solves most of the problems on moving background

Week11 ~ November 18 2015

-Talked about similar companies Mobileye ( creating template for object / they are looking and calculating distances / shape recognition based algorithm / more than one algorithms are working )

-Talked about ocr systems

-Blob analysis to keep track of cars (https://www.youtube.com/watch?v=VsN23K7Rzmw)

-We saw optical flow is not good way to continue

-Still having problems with moving camera issue ( they lead false alarms )

-Checked lectures on Itunes about object tracking

-Talked about term goal ( at the end of the semester we need to have stable object acquiring and tracking algorithms )

-Talked about github projects

-Talked about extra equipments to work with ( mostly working with linux and unix environment )

Week12 ~ November 23 2015:

-Frame difference method gives better results

-Object-tracking sample GitHub project (buggy code that works with the template method)

-Template correlation algorithm (details to be added)
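Since the template correlation details are still to be added, here is a minimal sketch of the idea, assuming grayscale frames stored as 2-D lists of ints: slide the template over the image and score every position with the zero-mean normalized cross-correlation, which is the score OpenCV's `cv2.matchTemplate` computes in `cv2.TM_CCOEFF_NORMED` mode. The function names are hypothetical and the brute-force loops are for illustration only.

```python
import math

def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equal-size 2-D lists (-1..1)."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    return num / den if den > 0 else 0.0  # flat patches carry no correlation signal

def match_template(image, template):
    """Return (best_score, (x, y)) for the top-left corner of the best match."""
    th, tw = len(template), len(template[0])
    best = (-2.0, (0, 0))  # below the lowest possible NCC value of -1
    for y in range(len(image) - th + 1):
        for x in range(len(image[0]) - tw + 1):
            patch = [row[x:x + tw] for row in image[y:y + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, (x, y))
    return best
```

Because the score is normalized, a uniformly brighter or darker copy of the template still scores close to 1, which helps under changing lighting.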

December 7 2015

- Meeting with Bill

Updates:

updated: 10/17/2015

- Team members will install Python and OpenCV on their machines

- dlib needs to be studied

- A Gantt chart is going to be added to the wiki

updated: 10/24/2015

- A camera was prepared by Mr. Bill in order to detect humans easily from a vehicle. The camera, whose IR filter has been removed, has a fisheye lens with a wider field of view and closer focus. A sample capture is shown below.

- Bill would like the team to focus mostly on object tracking in the first stage rather than on detecting humans.

- Object tracking methods were researched and the frame difference algorithm was tested.

- With this method, objects are easily recognized in the thresholded image. Its biggest disadvantage is that it does not give effective results while the camera is moving.

- After the conversation with Prof. Yin, we were advised to consider two algorithms: Gavrila and Giebel [2002] and Viola et al. [2003]. We will study both papers and share the results on this wiki page.
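The frame difference method tested above can be sketched as follows, assuming two consecutive grayscale frames as 2-D lists of 0-255 ints; the threshold of 25 and the helper names are hypothetical. In OpenCV the same two steps map onto `cv2.absdiff` followed by `cv2.threshold`.

```python
def frame_difference(prev, curr, threshold=25):
    """Binary motion mask: 255 where |curr - prev| > threshold, else 0."""
    return [[255 if abs(c - p) > threshold else 0 for p, c in zip(prow, crow)]
            for prow, crow in zip(prev, curr)]

def motion_bbox(mask):
    """Bounding box (x, y, w, h) around all white pixels, or None if nothing moved."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)
```

When the camera itself moves, every background edge also changes between frames and crosses the threshold, which is why this method gives poor results on a moving truck.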

updated: 10/25/2015

- Unlike the frame difference method, today the HSV filtering method was used to track an object. In this method, the color range of the selected object is determined in the HSV color space. The thresholded image shows only the pixels whose colors are close to that of the selected object in HSV space.

- The issue with this method is that, even though HSV filtering can handle the moving-camera scenario, pedestrians do not always wear the same color, so they cannot be detected and classified by their colors.
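A minimal sketch of HSV filtering using only Python's `colorsys` module; it assumes RGB pixels with 0-255 channels and a hue range given in degrees, and the saturation and brightness minimums are hypothetical. Note that OpenCV's `cv2.cvtColor(..., cv2.COLOR_BGR2HSV)` stores 8-bit hue as 0-179 rather than degrees, so ranges passed to `cv2.inRange` would need halving.

```python
import colorsys

def hsv_mask(rgb_image, hue_range, s_min=0.4, v_min=0.3):
    """Return a 255/0 mask of pixels whose hue lies in hue_range (degrees).

    rgb_image: 2-D list of (r, g, b) tuples with 0-255 channels.
    hue_range: (low, high); low > high means the range wraps past 360 (e.g. red).
    s_min, v_min: hypothetical minimum saturation/value in [0, 1], so that
    grey or dark pixels (whose hue is meaningless) are rejected.
    """
    low, high = hue_range
    mask = []
    for row in rgb_image:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            hue = h * 360.0
            if low <= high:
                in_range = low <= hue <= high
            else:  # wrap-around range such as (340, 20) for red
                in_range = hue >= low or hue <= high
            out.append(255 if in_range and s >= s_min and v >= v_min else 0)
        mask.append(out)
    return mask
```

The saturation check matters as much as the hue range: grey clothing or asphalt has almost no saturation, so its hue value is essentially noise.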

updated: 10/27/2015

- The gantt chart is updated.

Project Plan:

1 DEFINITION :

There have been several cases in which a truck driver hit a worker behind the vehicle. Since trucks are long vehicles and their trailers block the driver's view, these kinds of accidents are very hard to prevent. To help truck drivers back up safely, we propose mounting a mechanism at the back of the trailer that warns the driver whether or not a person is behind it. With this project, we as the Vehware team would like to reduce the number of lives lost and physical damage.

1.1 Project Background and Overview

The primary focus of the project is detecting humans behind the truck and warning the driver. If the situation becomes too dangerous, the system may intervene and stop the vehicle. To do that, the first goal is detecting the human body. This problem can be approached with several different system designs. The first option is an Intel RealSense camera, which can measure the distance to an object (a human in this case), so no algorithm for estimating the distance between the camera and the human would need to be implemented. The second option is an IR camera, which gives a clearer view. IR cameras sense the temperature of all objects in their field of view. Because humans are assumed to be warmer than their surroundings in a construction zone, detecting humans should be more straightforward in the video stream of an IR camera than with other cameras. The third option is a Kinect, which is costly (to be explained).

1.2 Business Objectives

The Vehware project will provide team members with experience in a professional development process and its deliverables. We will apply our knowledge of Python, C++, streaming media, OpenCV, software development and product documentation. We will learn the expectations of the software industry for design, planning and development. In addition, we will learn the expected and appropriate level of timely company communications (i.e., presentations, status updates, progress to plan, etc.). Our main customers will be companies that use big trucks in their business.

At the first stage of the project, team members agreed on a step-by-step approach to the planning and development process. The project's main focus is to warn truck drivers before dangerous situations that could lead to accidents, and our team aims to reduce those problems. The prototype we produce starts with recognizing people in a video stream. After we settle the main structure of the object recognition, we will extend our prototype and plan to add analysis of dangerous situations behind the truck. These improvements will increase safety behind trucks.

1.3 Project Objectives

Short term objectives: Applying an algorithm that detects humans in a picture or video stream. It does not have to be optimized for running on real trucks. Short term goals are mainly focused on developing a prototype for demonstration and attracting investment with it.

Medium term objectives: Improving the algorithm so that it also tracks humans. Another medium term objective is decreasing false negative events. Here, a false negative event is one where the algorithm fails to find a human in a capture even though one is actually present. The algorithm should become more sensitive to humans in the camera's field of view.

Long term objectives: After the human tracking system is developed and tested on trucks, the project would be extended to check the truck's other blind spots. The system could warn the driver if any vehicle is traveling into its blind spot area. This part of the project helps the truck turn safely.

1.4 Project Constraints

In our project there are some limitations that may influence how we manage and proceed with the project. Our project constraints fall into several categories.

Time frames: Our design specifications and a simple prototype must be completed by the first week of December 2015. The fully completed work must be done by the end of May 2016.

Resources: To begin with, we need a simple video recorder or any other device that can provide a continuous video stream whose data we can examine. For the early prototype we work with personal phones. For the development part, three software developers will work on the project for eight months.

Activity performance: For the Vehware project, all team members have basic knowledge of image processing and project documentation.

Scope: The scope of the project includes almost all dangers involving people. Since the area behind a truck is almost invisible to the driver, our early prototype will identify people behind the truck and warn the driver. Later versions of the project will divide the area behind the truck into parts (according to the probability of danger). Challenges: lighting conditions, sufficient equipment, pose.

Cost: Not calculated yet.

1.5 Project Scope

Steps to detect humans in a video stream: According to the article “A survey of techniques for human detection from video” by Neeti A. Ogale, humans can be detected based on their features (shape, color, motion). Two main techniques are presented in the article: “background subtraction” and “direct detection”. In the background subtraction method, each frame is compared with a model of the background and a threshold value is applied: in gray scale, pixels whose difference from the background is above the threshold belong to objects (cars, humans, signs, etc.), and pixels below the threshold belong to the background, which is unnecessary for this project. Background subtraction requires further steps after the foreground has been extracted, whereas direct detection finds humans in a single process. http://www-lb.cs.umd.edu/Grad/scholarlypapers/papers/neetiPaper.pdf
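The background subtraction step described above could look like the following minimal sketch, assuming grayscale frames as 2-D lists. The background model here is a simple running average, which is an assumption and not necessarily the model used in the survey, and `alpha` and `threshold` are hypothetical values. OpenCV offers comparable building blocks such as `cv2.accumulateWeighted` and `cv2.createBackgroundSubtractorMOG2`.

```python
def foreground_mask(background, frame, threshold=30):
    """255 where the frame differs from the background model by more than threshold."""
    return [[255 if abs(f - b) > threshold else 0 for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]

def update_background(background, frame, alpha=0.05):
    """Running-average background model: B <- (1 - alpha) * B + alpha * frame."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]

def subtract_background(frames, alpha=0.05, threshold=30):
    """Yield one foreground mask per frame; the first frame only seeds the model."""
    frames = iter(frames)
    first = next(frames, None)
    if first is None:
        return
    background = [[float(v) for v in row] for row in first]
    for frame in frames:
        yield foreground_mask(background, frame, threshold)
        background = update_background(background, frame, alpha)
```

A small `alpha` keeps a briefly stationary person in the foreground longer, while a large one adapts faster to lighting changes; the right balance would have to be found on real footage.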

1.6 Project Scope Inclusions

Histograms of Oriented Gradients for Human Detection: The histogram of oriented gradients (HOG) is a feature descriptor used in computer vision and image processing for the purpose of object detection. The technique counts occurrences of gradient orientation in localized portions of an image. This method is similar to that of edge orientation histograms, scale-invariant feature transform descriptors, and shape contexts, but differs in that it is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization for improved accuracy. Navneet Dalal and Bill Triggs, researchers for the French National Institute for Research in Computer Science and Automation (INRIA), first described HOG descriptors at the 2005 Conference on Computer Vision and Pattern Recognition (CVPR). In this work they focused on pedestrian detection in static images, although since then they expanded their tests to include human detection in videos, as well as to a variety of common animals and vehicles in static imagery.
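To make the gradient-histogram idea concrete, here is a minimal sketch of one HOG cell and one block, assuming a grayscale image as a 2-D list. It uses centred [-1, 0, 1] gradients, unsigned orientations in 0-180 degrees, 9 bins, 8x8-pixel cells and 2x2-cell blocks, which match the settings reported by Dalal and Triggs and the defaults of OpenCV's `cv2.HOGDescriptor`. For simplicity each pixel votes into a single bin; the original method interpolates votes between neighbouring bins. The function names are hypothetical.

```python
import math

def cell_histogram(img, x0, y0, cell=8, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations in one cell."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    h, w = len(img), len(img[0])
    for y in range(y0, y0 + cell):
        for x in range(x0, x0 + cell):
            # centred [-1, 0, 1] differences; border pixels reuse the edge value
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

def l2_normalize(vec, eps=1e-6):
    """v / sqrt(||v||^2 + eps^2), the L2 block normalization."""
    norm = math.sqrt(sum(v * v for v in vec) + eps * eps)
    return [v / norm for v in vec]

def block_descriptor(img, x0, y0, cell=8, bins=9):
    """One HOG block: 2x2 cell histograms concatenated and L2-normalized."""
    vec = []
    for dy in (0, cell):
        for dx in (0, cell):
            vec.extend(cell_histogram(img, x0 + dx, y0 + dy, cell, bins))
    return l2_normalize(vec)
```

The full descriptor slides such blocks over a 64x128 detection window with an 8-pixel stride and feeds the concatenated vector to a linear SVM.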

2 APPROACH

2.1 Project Strategy

In order to succeed with our project, we decided to clarify our goals and plans based on time (short term and long term plans). Our basic steps in the Vehware project are listed below:

1-Documentation and Planning

2-Preparing appropriate test materials

3-Working on human detection algorithms, documentation and articles

4-Development

5-Testing

2.2 The Project Schedule

Possible solutions:

After analyzing the problem well, we considered possible solutions on both the hardware and the software side.

Software solutions:

The paper[1] can serve as a reference since it was presented at a conference. If a paper has been cited 150 times and more than 10 years have passed since it was published, the problem must have been solved. We should study it well once we have access to the BU network.

Hardware solutions: Which hardware best fits the possible solutions? A camera with image processing, or Kinect-like motion trackers? Can a Raspberry Pi 2 or a similar board handle these software solutions? Input devices:

- Intel RealSense camera: able to determine the distance of an object, short range, low price compared to Kinect.

- Kinect: longer range, able to classify the movement of a human by default, high price ($150).

- Double camera: cheapest solution; more programming work and CPU power required.

- Infrared camera: reasonable cost ($40), easy to detect humans, difficult to detect other objects.

Input devices are listed based on a PhD student's suggestions.

01/31/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

-Discussed the CPT process and printed the forms.

- Attachments A and B were updated for the CPT forms

- CPT forms were prepared

- Code from the previous Senior Project was prepared to send to Merwyn Jones

-Spoke with Mr. Bill about the semester project; he identified a few to-dos to optimize the existing code. We also talked about our achievements so far and about technologies that have already been released publicly, to gather information that can guide our work.

-Decided on future goals, talked about which algorithms to use and discussed their pros and cons. The team also asked Mr. Bill to help us find people we can ask about problems with our code and algorithms.

- A new detailed Gantt chart, based on the selected human and car detection algorithms, will be prepared and uploaded here

-This semester we are going to deliver the plan items in the Gantt chart and end up with a program that does:

1 Auto detection of cars

2 Notification to driver

3 Danger zones

4 Human detection and Tracking

5 Reduce false alarms

- As a team, we divided the tasks and started working on our own goals (such as a detailed Gantt chart, finding and trying human detection algorithms, and pedestrian detection with machine learning)

Huseyin: research vehicle detection by license plates

Ahmet: research pedestrian detection using HOG, and the application of machine learning to object detection

Okan: study the example code of an autonomous RC car

02/07/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

-The Gantt chart will be updated based on the decisions made in the meeting with Mr. Bill

-CPT forms were signed and handed in to the Watson office.

-During this time, team members did independent research

-The histogram of oriented gradients method was studied.

-Histogram of oriented gradients (HOG) is based on the "gradient" at each pixel. The gradient tells whether the pixel lies on an edge, the direction of that edge, and how strong the edge is (src)

-The human body has a specific shape and can easily be distinguished from other objects by its gradient properties. To do that, however, the developer needs pre-trained HOG features (a classifier trained on HOG descriptors of people).

This photo is the output of the built-in HOG function of OpenCV. While the method seems to give accurate results, the time it spends on a single picture is too long and needs to be reduced.

-Detecting cars by a general property they all share might be a good idea for automatic detection; for that reason we focused on license plate recognition

-License plates have a standard shape; the main difference between them is their color

-Detecting vehicles by their plates could reduce computation and make the algorithm faster

-A disadvantage of plate-based recognition, compared with holistic detection of the whole car, is that a passing car may go out of range at a short distance

-Started researching the AForge.NET library, which includes faster shape recognition algorithms.

-Encountered a significant level of false alarms
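One way to exploit the fixed plate shape noted above is to keep only candidate rectangles whose width-to-height ratio is close to that of a plate. This minimal sketch assumes the candidates are (x, y, w, h) boxes from an earlier step (for example contour bounding boxes) and uses the 2:1 ratio of a standard 12 x 6 inch US plate; the tolerance, minimum area and function name are hypothetical.

```python
def plate_candidates(rects, target_ratio=2.0, tolerance=0.3, min_area=200):
    """Keep (x, y, w, h) boxes whose aspect ratio w/h is near target_ratio.

    tolerance is relative: 0.3 accepts ratios within +/-30% of the target.
    min_area drops tiny boxes that are more likely noise than plates.
    """
    kept = []
    for x, y, w, h in rects:
        if h <= 0 or w * h < min_area:
            continue
        if abs(w / h - target_ratio) <= tolerance * target_ratio:
            kept.append((x, y, w, h))
    return kept
```

A plate seen at an angle from the side of the truck looks narrower than 2:1, so the tolerance would need tuning on footage from the actual camera position.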

02/14/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

- This week, all team members will research how to detect cars at the sides of the vehicle

- Based on that research, Mr. Bill and the team members will decide which topic to concentrate on

After a meeting, we decided to divide the tasks among team members.

Okan and Huseyin will work on detecting and tracking vehicles in the blind spot of a truck.

Ahmet will work on pedestrian detection.

These two will be our main topics for this semester.

02/21/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

Gantt chart needs to be posted here.

Results of the meeting with Mr. Bill:

Next meeting date with Mr. Bill is 02/22

-By the end of this week, we are expected to have a video shot from a camera at the back of the vehicle for testing purposes.

-HOG will be run on the video stream and the performance of the algorithm will be analyzed.

-We will start implementing a method that measures the distance between a pedestrian and the vehicle.
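A common starting point for the planned distance measurement is the pinhole camera model: a person of real height H who appears h pixels tall is roughly f * H / h away, where f is the focal length in pixels. The sketch below assumes one calibration shot of a person at a known distance and an average height of 1.7 m (both hypothetical), and it ignores lens distortion, so frames from the fisheye camera would first need undistorting (for example with OpenCV's `cv2.fisheye` module). The function names are hypothetical.

```python
ASSUMED_PERSON_HEIGHT_M = 1.7  # hypothetical average pedestrian height

def focal_length_px(known_distance_m, real_height_m, pixel_height):
    """Calibrate f (in pixels) from one image of an object at a known distance."""
    return pixel_height * known_distance_m / real_height_m

def distance_m(focal_px, real_height_m, pixel_height):
    """Pinhole estimate: distance = f * H / h."""
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_px * real_height_m / pixel_height

def pedestrian_distance(focal_px, box_height_px):
    """Distance to a detected pedestrian from the height of their bounding box."""
    return distance_m(focal_px, ASSUMED_PERSON_HEIGHT_M, box_height_px)
```

The estimate is only as good as the box height: a crouching or partly occluded person produces a shorter box and therefore an overestimated distance.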

Blind Spot Car Detection

- Mean shift based tracking (CamShift)

- Maximally stable extremal region (MSER) extraction: the algorithm identifies contiguous sets of pixels whose outer boundary pixel intensities are higher (by a given threshold) than the inner boundary pixel intensities. Such regions are said to be maximally stable if they do not change much over a range of intensity thresholds.

- lk_track: sparse optical flow demo. Uses goodFeaturesToTrack for track initialization and back-tracking for match verification between frames.
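The back-tracking check in lk_track can be sketched as follows: each point is tracked forward from the previous frame to the current one, then backward again, and kept only if it returns to within about one pixel of where it started. The OpenCV calls and parameter values follow the `lk_track.py` sample, but the function names are hypothetical, and OpenCV is imported inside the functions so that the pure-Python check can run on its own.

```python
def forward_backward_mask(p0, p0r, max_err=1.0):
    """True for each point whose back-tracked position p0r lies within
    max_err pixels (in both x and y) of its starting position p0."""
    return [max(abs(ax - bx), abs(ay - by)) < max_err
            for (ax, ay), (bx, by) in zip(p0, p0r)]

def init_points(gray):
    """Pick corners to track; OpenCV returns None if no corners are found."""
    import cv2  # lazy import: only needed when working on real frames
    return cv2.goodFeaturesToTrack(gray, maxCorners=500, qualityLevel=0.3,
                                   minDistance=7, blockSize=7)

def track_step(prev_gray, gray, p0):
    """Track p0 (float32 array of shape (N, 1, 2)) from prev_gray to gray.

    Returns (x0, y0, x1, y1) tuples for points that pass the forward-backward check.
    """
    import cv2
    lk_params = dict(winSize=(15, 15), maxLevel=2,
                     criteria=(cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 0.03))
    p1, _status, _err = cv2.calcOpticalFlowPyrLK(prev_gray, gray, p0, None, **lk_params)
    p0r, _status, _err = cv2.calcOpticalFlowPyrLK(gray, prev_gray, p1, None, **lk_params)
    start = p0.reshape(-1, 2).tolist()
    end = p1.reshape(-1, 2).tolist()
    good = forward_backward_mask(start, p0r.reshape(-1, 2).tolist())
    return [(a[0], a[1], b[0], b[1]) for a, b, ok in zip(start, end, good) if ok]
```

Points that fail the round trip are usually on low-texture or occluded areas, which are a common source of stray tracking points.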

Pedestrian detection

-A video was recorded for testing purposes. In this video, a group of people were behind the vehicle, and the video was recorded while the vehicle was backing up. (Thanks to the Besket team)

-The OpenCV HOG pedestrian detection algorithm was run on the test video.

- After running the function, we saw that processing a frame takes about 3 seconds, and the false alarm rate is too high.

- After the meeting with Mr. Bill on 02/22, it was decided that we will use the same method in a Linux environment with Python.
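Two common ways to attack the 3 seconds per frame and the high false alarm rate are to run the detector on a downscaled frame and to drop low-scoring or overlapping detections. The sketch below assumes OpenCV's built-in people detector (`cv2.HOGDescriptor` with `cv2.HOGDescriptor_getDefaultPeopleDetector()`); the scale factor, score threshold and overlap threshold are hypothetical and would need tuning on the test video. The non-maximum suppression helper is a plain greedy implementation, not an OpenCV function, and all function names are hypothetical.

```python
def scale_boxes(boxes, factor):
    """Map (x, y, w, h) boxes found on a resized frame back to original coordinates."""
    return [tuple(int(round(v / factor)) for v in box) for box in boxes]

def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return [boxes[i] for i in keep]

def make_people_detector():
    """OpenCV HOG descriptor with its built-in pedestrian SVM (create once, reuse)."""
    import cv2  # lazy import so the pure helpers above work without OpenCV
    hog = cv2.HOGDescriptor()
    hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
    return hog

def detect_people(hog, frame, factor=0.5, min_score=0.5, iou_threshold=0.5):
    """Detect on a downscaled copy of frame; return boxes in original coordinates."""
    import cv2
    import numpy as np
    small = cv2.resize(frame, None, fx=factor, fy=factor)
    rects, weights = hog.detectMultiScale(small, winStride=(8, 8), padding=(8, 8), scale=1.05)
    if len(rects) == 0:
        return []
    scores = np.asarray(weights, dtype=float).ravel().tolist()
    pairs = [(tuple(int(v) for v in r), s) for r, s in zip(rects, scores) if s >= min_score]
    boxes = scale_boxes([b for b, _ in pairs], factor)
    return non_max_suppression(boxes, [s for _, s in pairs], iou_threshold)
```

Downscaling trades recall for speed: the default detector window is 64x128 pixels, so at `factor=0.5` a person must be at least about 256 pixels tall in the original frame to be found.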

02/28/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

- This week, team members built upon last week's code for blind spot car tracking and pedestrian tracking

- The development team received a laptop with Linux Mint installed on it. (03/03/2016)

- A list of libraries and tools will be set up on this machine by the end of this week. (Boost, g++, CMake)

03/06/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

--- For blind spot car tracking:

--- Team members tried to reduce unrelated tracking points but have not yet found a proper way to do so

--- As the picture below shows, points move in different directions. Team members are going to detect points that move opposite to the normal car direction and erase them.

--- After removing the reverse points, we are left mainly with the forward-moving points that we need.

--- Team members found a way to detect a car in the blind spot area based on tracking point density: after the false tracking points are removed, a square will be drawn around the dense group of points.

--- As the picture shows, after the reverse-direction points are removed, the remaining points mostly belong to the car. Detecting these dense point regions will help to detect the car.
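The two steps planned here, discarding points that move against the expected direction and then boxing the densest group of what remains, can be sketched as below. It assumes each track is an (x0, y0, x1, y1) tuple of a point's previous and current position, that forward motion is along +x in image coordinates, and a grid cell size of 40 pixels; all of these, and the function names, are hypothetical.

```python
from collections import Counter

def forward_points(tracks, direction=(1.0, 0.0), min_move=1.0):
    """Keep current positions of tracks that move at least min_move pixels
    with a positive component along `direction` (reverse points are dropped)."""
    ux, uy = direction
    kept = []
    for x0, y0, x1, y1 in tracks:
        dx, dy = x1 - x0, y1 - y0
        if (dx * dx + dy * dy) ** 0.5 >= min_move and dx * ux + dy * uy > 0:
            kept.append((x1, y1))
    return kept

def densest_box(points, cell=40, min_points=5):
    """Bounding box (x, y, w, h) of the points in the densest grid cell and its
    8 neighbours, or None if that cell holds fewer than min_points points."""
    if not points:
        return None

    def cell_of(p):
        return int(p[0] // cell), int(p[1] // cell)

    (cx, cy), count = Counter(cell_of(p) for p in points).most_common(1)[0]
    if count < min_points:
        return None
    near = [p for p in points
            if abs(cell_of(p)[0] - cx) <= 1 and abs(cell_of(p)[1] - cy) <= 1]
    xs = [p[0] for p in near]
    ys = [p[1] for p in near]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))
```

The `min_points` check is what keeps a few isolated leftover points from producing a false car box.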

03/13/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

- As mentioned last week, we managed to eliminate the points that flow in the reverse direction

- The code output shows points moving in the same direction as the car carrying the camera

- To do that, we modified the two-dimensional array that holds the tracking points

- When we increased the speed of the video stream, we lost some major points

--- The reason for losing these points is that the video stream skips some frames when its speed is increased (we tried this to get more efficient output)

--- Unfortunately, increasing the video stream speed doesn't help to increase efficiency

--- As the .gif file shows, the first vehicle's green tracking tails disappeared after it changed its direction

--- As a next step, we will use an algorithm similar to k-means to detect cars and enclose each one in a rectangle

- Found that some of the noise and false alarms are caused by the camera being shaken by the road. A shaking camera makes the code output unrelated tracking points
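The planned k-means step could look like this minimal sketch: cluster the remaining tracking points into k groups and draw a box around each group that has enough points. It assumes points are (x, y) tuples and that the number of cars k is given, which is the main weakness of k-means here; a density-based method that does not need k may suit a varying number of cars better. The function names, iteration count and `min_points` are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means on (x, y) points; returns (centers, clusters)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # k distinct starting points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # new center = mean of its cluster; an empty cluster keeps its old center
        new_centers = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    return centers, clusters

def cluster_boxes(clusters, min_points=3):
    """(x, y, w, h) box around every cluster with at least min_points points."""
    boxes = []
    for c in clusters:
        if len(c) < min_points:
            continue
        xs = [x for x, _ in c]
        ys = [y for _, y in c]
        boxes.append((min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)))
    return boxes
```

Discarding small clusters in `cluster_boxes` is a cheap guard against the stray points caused by camera shake.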

03/20/2016 Weekly activities including accomplishments, problems, changes to plan, meeting minutes, etc...

| Github user | Language | Method | Required libraries | Status | Comments |
|---|---|---|---|---|---|
| angus | C++ | HOG and Haarcascade | ROS, OpenCV, CMake | not compiled | |
| dominikz | C++, Python | ? | OpenCV, NumPy | | This is a compact system that is difficult to edit. Because the explanation in the readme file is a single line, it is difficult to understand how the code works and what the input/output types are. The application requires a dataset whose format is very different from the ones we are familiar with. |
| levinJ | | Laplacian | Boost, CUDA, OpenCV, CMake | not compiled | The project is divided into subgroups, each of which executes a detection system. The source code can be compiled via the CMake command. CMake was successfully initialized; however, the Boost library could not be configured. |
| | C++ | HOG | OpenCV | compiled | The OpenCV default function was compiled and run. The code was modified so that it can take a video as input. Even though the code could find pedestrians, it had a high false alarm rate and the processing time was too long. Rescaling may be performed. |
| chhshen | C++, Matlab | Optical Flow | | not compiled | Because the algorithm was developed for research purposes, the input files it takes are too complex (Caltech research). Matlab is used for data training. |
| garystafford | C++ | blob | OpenCV, cvBlob | not compiled | This project requires a library named cvBlob. It also provides a shell script that installs the libraries. However, the script assumes the OpenCV library is not installed on the OS, so I tried to install only cvBlob. It failed. |
| youxiamotors | C++ | HOG | OpenCV | not compiled | Files in the project are clearly explained, and a makefile is provided. However, when the makefile is run, it cannot compile the code because it cannot link the OpenCV library. We need to figure out how to link the OpenCV library to make the project run. |

- This video capture is divided into three zones, named the danger zone, the warning zone and the neutral zone.

- 1000 points are randomly spread over the stream. Points that fall into the danger zone are colored red.
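The zone coloring described above can be sketched as follows, assuming horizontal zones defined by two pixel rows (the closer to the bottom of the rear-facing frame, the more dangerous) and BGR colors as OpenCV uses them. The row boundaries, the warning and neutral colors, and the function names are hypothetical; drawing the points would then be a matter of calling `cv2.circle` with each color.

```python
import random

# BGR colors, the channel order OpenCV drawing functions expect
ZONE_COLORS = {"danger": (0, 0, 255),     # red
               "warning": (0, 255, 255),  # yellow (hypothetical)
               "neutral": (0, 255, 0)}    # green (hypothetical)

def zone_of(y, warning_top, danger_top):
    """Zone of a point from its row: rows >= danger_top are closest to the truck."""
    if y >= danger_top:
        return "danger"
    if y >= warning_top:
        return "warning"
    return "neutral"

def spread_points(width, height, n=1000, seed=None):
    """n random (x, y) pixel positions inside a width x height frame."""
    rng = random.Random(seed)
    return [(rng.randrange(width), rng.randrange(height)) for _ in range(n)]

def color_points(points, warning_top, danger_top):
    """Pair each point with the BGR color of the zone it falls in."""
    return [(p, ZONE_COLORS[zone_of(p[1], warning_top, danger_top)]) for p in points]
```

For a 640x480 frame, `color_points(spread_points(640, 480, seed=1), 160, 320)` would split the frame into three equal horizontal bands.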