Analyzing Facial Expressions and Emotions in Three-Dimensional Space with Multimodal Sensing

Introduction

Traditionally, human facial expressions have been studied using either 2D static images or 2D video sequences. 2D-based analysis has difficulty handling large pose variations and subtle facial behavior. This exploratory research targets facial expression analysis and recognition in 3D space, as well as emotion analysis from multimodal data. Analyzing 3D facial expressions makes it possible to examine the fine structural changes inherent in spontaneous expressions. The project aims to achieve high accuracy in identifying a wide range of facial expressions and emotions, with the ultimate goal of advancing the general understanding of facial behavior and of the 3D structure of facial expressions and emotions at a detailed level. The following databases have been released to the public: (1) BU-3DFE (2006), (2) BU-4DFE (2008), (3) BP4D-Spontaneous (2014), (4) BP4D+ (2016), (5) EB+ (2019), (6) BU-EEG (2020), (7) BP4D++ (2023), and (8) ReactioNet (2023).

Project Progress

I. BU-3DFE (Binghamton University 3D Facial Expression) Database

Although 3D facial models have been used extensively for 3D face recognition and 3D face animation, the usefulness of such data for 3D facial expression recognition was unknown. To foster research in this field, we created a 3D facial expression database (the BU-3DFE database), which includes 100 subjects with 2,500 facial expression models. The BU-3DFE database is available to the research community (e.g., researchers in areas as diverse as affective computing, computer vision, human-computer interaction, security, biomedicine, law enforcement, and psychology).

The database presently contains 100 subjects (56% female, 44% male), ranging in age from 18 to 70 years, with a variety of ethnic/racial ancestries, including White, Black, East Asian, Middle-East Asian, Indian, and Hispanic Latino. Participants include undergraduates, graduate students, and faculty from our institute's departments of Psychology, Arts, and Engineering (Computer Science, Electrical Engineering, System Science, and Mechanical Engineering). The majority of participants were undergraduates from the Psychology Department (collaborator: Dr. Peter Gerhardstein).

Each subject performed seven expressions in front of the 3D face scanner. With the exception of the neutral expression, each of the six prototypic expressions (happiness, disgust, fear, anger, surprise, and sadness) was captured at four levels of intensity. Therefore, there are 25 3D expression models for each subject (one neutral model plus six expressions at four intensity levels), resulting in a total of 2,500 3D facial expression models in the database. Associated with each expression shape model is a corresponding facial texture image captured from two views (about +45° and -45°). As a result, the database consists of 2,500 two-view texture images and 2,500 geometric shape models.
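The per-subject layout above can be checked with a short enumeration. This is a minimal sketch: the `(subject, expression, intensity)` tuples, the use of intensity 0 for the neutral scan, and the function name `enumerate_models` are hypothetical and do not reflect the file naming of the actual release.

```python
from itertools import product

# Per-subject layout described in the text; identifiers are illustrative only.
PROTOTYPIC = ("happiness", "disgust", "fear", "anger", "surprise", "sadness")
INTENSITY_LEVELS = 4  # levels 1 (low) .. 4 (high)
NUM_SUBJECTS = 100


def enumerate_models(num_subjects: int = NUM_SUBJECTS) -> list[tuple[int, str, int]]:
    """Return (subject, expression, intensity) tuples; neutral uses intensity 0."""
    models = []
    for subject in range(1, num_subjects + 1):
        models.append((subject, "neutral", 0))  # one neutral scan per subject
        for expression, level in product(PROTOTYPIC, range(1, INTENSITY_LEVELS + 1)):
            models.append((subject, expression, level))
    return models


if __name__ == "__main__":
    per_subject = len(enumerate_models(1))
    total = len(enumerate_models())
    print(per_subject, total)  # 25 2500
```

The count follows directly from 100 × (1 + 6 × 4) = 2,500; each of these models pairs with one two-view texture image.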

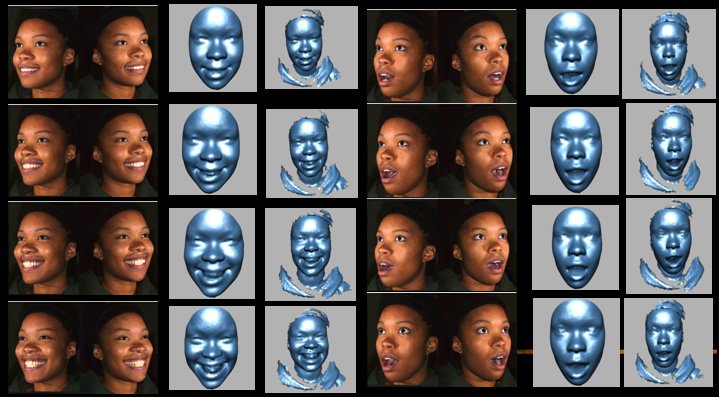

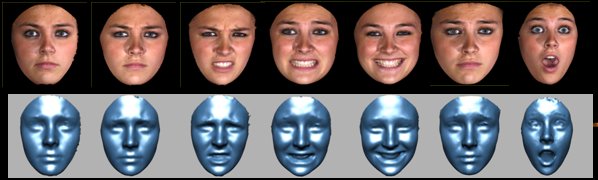

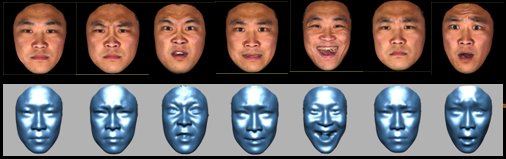

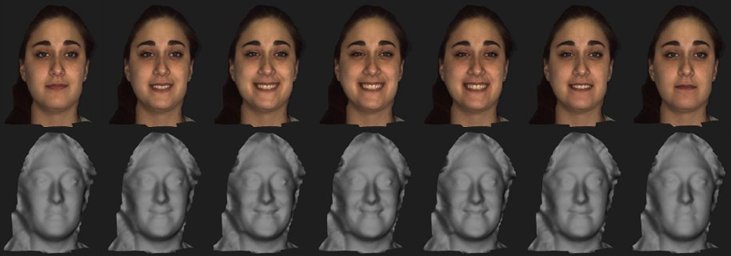

Top: four levels of facial expression intensity, from low to high; the expression models show the cropped face region and the entire facial head. Middle and bottom: seven expressions (neutral, anger, disgust, fear, happiness, sadness, and surprise) of a female and a male subject, with face images and facial models.

Facial Expression Recognition Based on the BU-3DFE Database

We investigated the usefulness of 3D facial geometric shapes for representing and recognizing facial expressions using 3D facial expression range data. We developed a novel approach that extracts primitive 3D facial expression features and then uses their distribution to classify the prototypic facial expressions. Facial surfaces are labeled with primitive surface features derived from the surface curvatures. The distribution of these features serves as a descriptor of the facial surface that characterizes the facial expression. In a person-independent study on the BU-3DFE database, this approach achieved a correct recognition rate of about 83% in classifying the six universal expressions using linear discriminant analysis (LDA).
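The pipeline described above (curvature-based surface labels, then a per-region label distribution, then a classifier) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the published method uses its own primitive (topographic) surface labels and expressive regions, whereas this sketch swaps in the simpler Besl-Jain classification by the signs of mean and Gaussian curvature. It also assumes principal curvatures per vertex are already computed, and the region names are hypothetical.

```python
from collections import Counter

# Eight surface types from the signs of mean (H) and Gaussian (K) curvature
# (Besl-Jain style, a stand-in for the published primitive labels).
# ASSUMPTION: normals point outward, so H < 0 means locally convex.
LABELS = ("peak", "pit", "ridge", "valley", "flat",
          "saddle_ridge", "saddle_valley", "minimal")


def _sign(x: float, eps: float) -> int:
    """Three-way sign with a dead zone of +/- eps treated as zero."""
    if x > eps:
        return 1
    if x < -eps:
        return -1
    return 0


def surface_label(k1: float, k2: float, eps: float = 1e-3) -> str:
    """Label one vertex from its principal curvatures k1, k2."""
    h = (k1 + k2) / 2.0  # mean curvature
    k = k1 * k2          # Gaussian curvature
    sh, sk = _sign(h, eps), _sign(k, eps * eps)
    if sk > 0:  # elliptic: K > eps^2 implies |H| > eps, so sh is never 0 here
        return "peak" if sh < 0 else "pit"
    if sk == 0:  # parabolic or planar
        return {-1: "ridge", 1: "valley", 0: "flat"}[sh]
    return {-1: "saddle_ridge", 1: "saddle_valley", 0: "minimal"}[sh]  # hyperbolic


def region_histogram(curvatures, eps: float = 1e-3) -> list[float]:
    """Normalized label histogram for one expressive region; input is [(k1, k2), ...]."""
    counts = Counter(surface_label(k1, k2, eps) for k1, k2 in curvatures)
    n = max(len(curvatures), 1)
    return [counts[label] / n for label in LABELS]


def face_descriptor(regions: dict, eps: float = 1e-3) -> list[float]:
    """Concatenate per-region histograms in a fixed (sorted) region order."""
    features: list[float] = []
    for name in sorted(regions):
        features.extend(region_histogram(regions[name], eps))
    return features
```

For a person-independent evaluation, descriptors like these would be fed to an LDA classifier (for example, scikit-learn's `LinearDiscriminantAnalysis`) trained and tested on disjoint sets of subjects. Using normalized histograms keeps the descriptor independent of how many vertices each region happens to contain.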

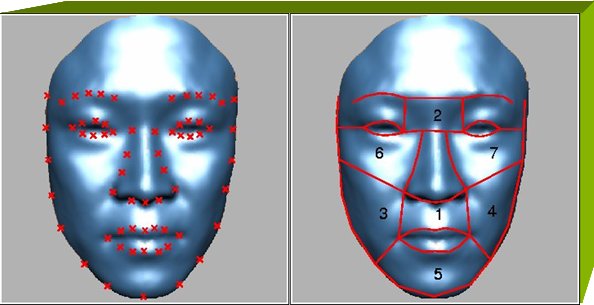

Expressive regions defined for the facial surface primitive features

Requesting Data (BU-3DFE)

With the agreement of the technology

transfer office of the SUNY at

Note:

(1) Students are not eligible to be recipients. If you are a student, please have your supervisor make the request.

(2) Once the agreement form is signed, we will provide access to download the data.

If this data is used, in whole or in part, for any publishable work, the following paper must be referenced:

“A 3D Facial Expression Database For Facial Behavior Research” by Lijun Yin, Xiaozhou Wei, Yi Sun, Jun Wang, and Matthew J. Rosato, 7th International Conference on Automatic Face and Gesture Recognition, 10-12 April 2006, pp. 211-216.

II. BU-4DFE (3D + time): A 3D Dynamic Facial Expression Database (Dynamic Data)

To extend the analysis of facial behavior from static 3D space to dynamic 3D space, we extended BU-3DFE to BU-4DFE.

Here we present a newly created high-resolution 3D dynamic facial expression database, which is available to the scientific research community. The 3D facial expressions are captured at video rate (25 frames per second). For each subject, there are six model sequences showing the six prototypic facial expressions (anger, disgust, happiness, fear, sadness, and surprise). Each expression sequence contains about 100 frames. The database contains 606 3D facial expression sequences captured from 101 subjects, for a total of approximately 60,600 frame models. Each 3D model in a 3D video sequence has a resolution of approximately 35,000 vertices. The texture video has a resolution of about 1040×1329 pixels per frame. The subjects comprise 58 females and 43 males with a variety of ethnic/racial ancestries, including Asian, Black, Hispanic/Latino, and White.
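The totals above follow from simple per-subject arithmetic, sketched below using only the approximate figures quoted in this section. The derived values (sequence duration, total capture time, total vertex count) are back-of-the-envelope estimates that assume exactly 100 frames per sequence, which the text gives only as "about 100"; the function name is hypothetical.

```python
# Figures quoted in the BU-4DFE description; frame counts are approximate.
SUBJECTS = 101             # 58 female + 43 male
EXPRESSIONS = 6            # anger, disgust, happiness, fear, sadness, surprise
FRAMES_PER_SEQUENCE = 100  # "about 100 frames" per sequence (assumed exact here)
FPS = 25
VERTICES_PER_MODEL = 35_000


def bu4dfe_estimates() -> dict:
    """Back-of-the-envelope totals derived from the per-subject layout."""
    sequences = SUBJECTS * EXPRESSIONS
    frames = sequences * FRAMES_PER_SEQUENCE
    return {
        "sequences": sequences,                             # 606
        "frames": frames,                                   # ~60,600
        "seconds_per_sequence": FRAMES_PER_SEQUENCE / FPS,  # ~4 s
        "total_minutes": frames / FPS / 60,                 # ~40.4 min of 3D video
        "total_vertices": frames * VERTICES_PER_MODEL,      # ~2.1 billion
    }


if __name__ == "__main__":
    assert 58 + 43 == SUBJECTS  # subject breakdown is consistent
    for key, value in bu4dfe_estimates().items():
        print(key, value)
```

At 25 frames per second, a 100-frame sequence spans roughly four seconds, which is enough to cover the onset, apex, and offset of a posed expression.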

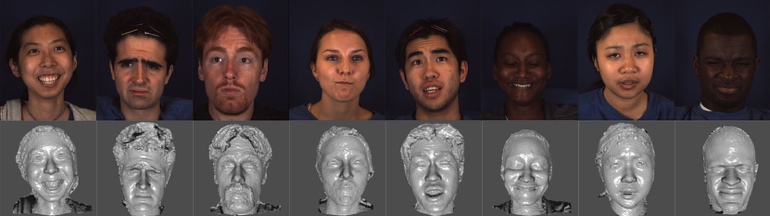

Individual model views


Sample expression model sequences (male and female)

Requesting Data (BU-4DFE)

With the agreement of the technology transfer office of the SUNY at

Note:

(1) Students are not eligible to be recipients. If you are a student, please have your supervisor make the request.

(2) Once a license agreement is signed, we will provide access to download the data.

(3) If this data is used, in whole or in part, for any publishable work, the following paper must be referenced:

“A High-Resolution 3D Dynamic Facial Expression Database” by Lijun Yin, Xiaochen Chen, Yi Sun, Tony Worm, and Michael Reale, The 8th International Conference on Automatic Face and Gesture Recognition, 17-19 September 2008 (Tracking Number: 66).

PI: Dr. Lijun Yin.

Research Team: Xiaozhou Wei, Yi Sun, Jun Wang, Matthew Rosato, Myung Jin Ko, Wanqi Tang, Peter Longo, Xiaochen Chen, Terry Hung, Michael Reale, Tony Worm, and Xing Zhang.

Collaborator: Dr. Peter Gerhardstein of Psychology, SUNY Binghamton, and his team (Ms. Gina Shroff).

Related Publications:

· Lijun Yin, Xiaozhou Wei, Yi Sun, Jun Wang, and Matthew Rosato, “A 3D Facial Expression Database For Facial Behavior Research”, The 7th International Conference on Automatic Face and Gesture Recognition (2006), IEEE Computer Society TC PAMI, Southampton, UK, April 10-12, 2006, pp. 211-216.

· Lijun Yin and Xiaozhou Wei, “Multi-Scale Primal Feature Based Facial Expression Modeling and Identification”, The 7th International Conference on Automatic Face and Gesture Recognition (2006), IEEE Computer Society TC PAMI, Southampton, UK, April 10-12, 2006, pp. 603-608.

· Jun Wang, Lijun Yin, Xiaozhou Wei, and Yi Sun, “3D Facial Expression Recognition Based on Primitive Surface Feature Distribution”, IEEE International Conference on Computer Vision and Pattern Recognition (CVPR 2006).

· L. Yin, X. Wei, P. Longo, and A. Bhuvanesh, “Analyzing Facial Expressions Using Intensity-Variant 3D Data for Human Computer Interaction”, 18th IAPR International Conference on Pattern Recognition (ICPR 2006), Hong Kong, pp. 1248-1251. (Best Paper Award)

· Y. Sun and L. Yin, “Evaluation of 3D Facial Feature Selection for Individual Facial Model Identification”, 18th IAPR International Conference on Pattern Recognition (ICPR 2006), Hong Kong, pp. 562-565.

· J. Wang and L. Yin, “Static Topographic Modeling for Facial Expression Recognition and Analysis”, Computer Vision and Image Understanding, Elsevier Science, Nov. 2007, pp. 19-34.

· L. Yin, X. Chen, Y. Sun, T. Worm, and M. Reale, “A High-Resolution 3D Dynamic Facial Expression Database”, The 8th International Conference on Automatic Face and Gesture Recognition (2008), IEEE Computer Society TC PAMI, Amsterdam, The Netherlands, 17-19 September 2008 (Tracking Number: 66).

· Y. Sun and L. Yin, “Facial Expression Recognition Based on 3D Dynamic Range Model Sequences”, The 10th European Conference on Computer Vision (ECCV08), Marseille, France, October 12-18, 2008.

III. BP4D-Spontaneous: Binghamton-Pittsburgh 3D Dynamic Spontaneous Facial Expression Database

Because posed and un-posed (aka “spontaneous”) 3D facial expressions differ along several dimensions, including complexity and timing, well-annotated 3D video of un-posed facial behavior is needed. We present a newly developed 3D video database of spontaneous facial expressions in a diverse group of young adults. Well-validated emotion inductions were used to elicit expressions of emotion and paralinguistic communication. Frame-level ground truth for facial actions was obtained using the Facial Action Coding System (FACS). Facial features were tracked in both the 2D and 3D domains using both person-specific and generic approaches. The work promotes the exploration of 3D spatiotemporal features in subtle facial expressions, a better understanding of the relation between pose and motion dynamics in facial action units, and a deeper understanding of naturally occurring facial action.

The database includes forty-one participants (23 women, 18 men) aged 18 to 29 years; 11 were Asian, 6 were African-American, 4 were Hispanic, and 20 were Euro-American. An emotion elicitation protocol was designed to elicit participants' emotions effectively: eight tasks, combining an interview with a series of activities, were used to elicit eight emotions.

The database is organized by participant. Each participant is associated with 8 tasks, and each task has both 3D and 2D videos. In addition, the metadata include manually annotated action units (FACS AUs), automatically tracked head pose, and 2D/3D facial landmarks. The database is about 2.6 TB in size (uncompressed).
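The participant → task → videos-plus-metadata organization described above can be mirrored by a small in-memory structure, for example to compute how often an action unit occurs across annotated frames. This is a hypothetical sketch: the class and field names, participant/task IDs, and file paths are illustrative and do not describe the released file format, and frames absent from `frame_aus` are simply treated as uncoded.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# HYPOTHETICAL layout mirroring the description (participant -> 8 tasks ->
# 2D/3D videos + metadata). Names and paths are illustrative only.


@dataclass
class TaskRecord:
    task_id: str   # e.g. "T1" .. "T8" (hypothetical naming)
    video_2d: str  # path to the 2D video
    video_3d: str  # path to the 3D model sequence
    frame_aus: Dict[int, Set[int]] = field(default_factory=dict)  # frame -> coded AUs
    head_pose: Dict[int, Tuple[float, float, float]] = field(default_factory=dict)  # (pitch, yaw, roll)
    landmarks_3d: Dict[int, List[Tuple[float, float, float]]] = field(default_factory=dict)


@dataclass
class Participant:
    participant_id: str
    tasks: List[TaskRecord] = field(default_factory=list)


def au_base_rate(participants: List[Participant], au: int) -> float:
    """Fraction of AU-annotated frames in which `au` is coded as present."""
    coded = active = 0
    for participant in participants:
        for task in participant.tasks:
            for aus in task.frame_aus.values():
                coded += 1
                active += au in aus  # bool counts as 0 or 1
    return active / coded if coded else 0.0


if __name__ == "__main__":
    task = TaskRecord("T1", "F001/T1_2d.avi", "F001/T1_3d",
                      frame_aus={0: {6, 12}, 1: {12}, 2: set(), 3: {1}})
    print(au_base_rate([Participant("F001", [task])], 12))  # 0.5
```

Keeping AU codes keyed by frame index makes it straightforward to align them with head pose and landmarks for the same frame when studying the relation between pose and facial action dynamics.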

Sample Expressions for Eight Tasks

Requesting Data (BP4D-Spontaneous)

With the agreement of the licensing offices of Binghamton University and the University of Pittsburgh, the database is available for use by external parties. Due to agreements signed by the volunteer models, a written agreement must first be signed by the recipient and by the research administration office director of the recipient's institution before the data can be provided. Furthermore, the data will be provided to parties who are pursuing research for non-profit use. To make a request for the data, please contact Dr. Lijun Yin at lijun@cs.binghamton.edu. For any profit/commercial use of such data, please also contact Dr. Lijun Yin and Ms. Amy Breski <abreski@binghamton.edu> and <innovation@binghamton.edu> of the Office of Technology Licensing and Innovation Partnerships.

Note:

(1) Students are not eligible to be recipients. If you are a student, please have your supervisor make the request.

(2) Once a license agreement is signed, we will provide access to download the data.

(3) If this data is used, in whole or in part, for any publishable work,

the following paper(s) must be referenced:

· Xing Zhang, Lijun Yin, Jeff Cohn, Shaun Canavan, Michael Reale, Andy Horowitz, Peng Liu, and Jeff Girard, “BP4D-Spontaneous: A high resolution spontaneous 3D dynamic facial expression database”, Image and Vision Computing, 32 (2014), pp. 692-706 (special issue of the Best of FG13).

· Xing Zhang, Lijun Yin, Jeff Cohn, Shaun Canavan, Michael Reale, Andy Horowitz, and Peng Liu, “A high resolution spontaneous 3D dynamic facial expression database”, The 10th IEEE International Conference on Automatic Face and Gesture Recognition (FG13), April 2013.

Research Team:

Binghamton University: Lijun Yin, Xing Zhang, Andy Horowitz, Michael Reale, Peter Gerhardstein, Shaun Canavan, and Peng Liu

University of Pittsburgh: Jeff Cohn, Jeff Girard, and Nicki Siverling

Related Publications:

· X. Zhang, L. Yin, J. Cohn, S. Canavan, M. Reale, A. Horowitz, and P. Liu, “A high resolution spontaneous 3D dynamic facial expression database”, The 10th IEEE International Conference on Automatic Face and Gesture Recognition (FG13), April 2013.

· Xing Zhang, Lijun Yin, Jeff Cohn, Shaun Canavan, Michael Reale, Andy Horowitz, Peng Liu, and Jeff Girard, “BP4D-Spontaneous: A high resolution spontaneous 3D dynamic facial expression database”, Image and Vision Computing, 32 (2014), pp. 692-706 (special issue of the Best of FG13).

· M. Reale, X. Zhang, and L. Yin, “Nebula Feature: A Space-Time Feature for Posed and Spontaneous 4D Facial Behavior Analysis”, The 10th IEEE International Conference on Automatic Face and Gesture Recognition (FG13), April 2013.

· S. Canavan, X. Zhang, and L. Yin, “Fitting and tracking 3D/4D facial data using a temporal deformable shape model”, IEEE International Conference on Multimedia and Expo (ICME13), 2013.

· P. Liu, M. Reale, and L. Yin, “Saliency-guided 3D head pose estimation on 3D expression models”, The 15th ACM International Conference on Multimodal Interaction (ICMI), 2013.

· S. Canavan, Y. Sun, and L. Yin, “A Dynamic Curvature Based Approach For Facial Activity Analysis in 3D Space”, IEEE CVPR Workshop on Socially Intelligent Surveillance and Monitoring (SISM), June 2012.

· P. Liu, M. Reale, and L. Yin, “3D head pose estimation based on scene flow and a 3D generic head model”, IEEE International Conference on Multimedia and Expo (ICME 2012), July 2012.

IV. Multimodal Spontaneous Emotion Database (BP4D+)

BP4D+, extended from the BP4D database, is a Multimodal Spontaneous Emotion Corpus (MMSE) containing multimodal data, including synchronized 3D, 2D, thermal, and physiological data sequences (e.g., heart rate, blood pressure, skin conductance (EDA), and respiration rate), and metadata (facial features and FACS codes).

There are 140 subjects, including 58 males and 82 females, with ages ranging from 18 to 66 years. Ethnic/racial ancestries include Black, White, Asian (including East Asian and Middle-East Asian), Hispanic/Latino, and others (e.g., Native American). With 140 subjects and 10 tasks (emotions) per subject, the database provides over 10 TB of high-quality data for the research community.

Paper citation:

· Zheng Zhang, Jeff Girard, Yue Wu, Xing Zhang, Peng Liu, Umur Ciftci, Shaun Canavan, Michael Reale, Andy Horowitz, Huiyuan Yang, Jeff Cohn, Qiang Ji, and Lijun Yin, “Multimodal Spontaneous Emotion Corpus for Human Behavior Analysis”, IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

Research Team:

· Lijun Yin, Jeff Cohn, Qiang Ji, Andy Horowitz, and Peter Gerhardstein

Related Publications:

· Shaun Canavan, Peng Liu, Xing Zhang, and Lijun Yin, “Landmark Localization on 3D/4D Range Data Using a Shape Index-Based Statistical Shape Model with Global and Local Constraints”, Computer Vision and Image Understanding (Special issue on Shape Representations Meet Visual Recognition), Vol. 139, Oct. 2015, pp. 136-148, Elsevier.

· Zheng Zhang, Jeff Girard, Yue Wu, Xing Zhang, Peng Liu, Umur Ciftci, Shaun Canavan, Michael Reale, Andy Horowitz, Huiyuan Yang, Jeff Cohn, Qiang Ji, and Lijun Yin, “Multimodal Spontaneous Emotion Corpus for Human Behavior Analysis”, IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

· Laszlo Jeni, Jeff Cohn, and Takeo Kanade, “Dense 3D face alignment from 2D videos in real-time”, IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2015. (Best Paper Award of FG 2015)

· Yue Wu and Qiang Ji, “Constrained Deep Transfer Feature Learning and its Applications”, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

· Peng Liu and Lijun Yin, “Spontaneous Facial Expression Analysis Based on Temperature Changes and Head Motions”, The 11th IEEE International Conference on Automatic Face and Gesture Recognition (FG15), 2015.

· Xing Zhang, Lijun Yin, and Jeff Cohn, “Three Dimensional Binary Edge Feature Representation for Pain Expression Analysis”, The 11th IEEE International Conference on Automatic Face and Gesture Recognition (FG15), 2015.

· Sergey Tulyakov, Xavier Alameda-Pineda, Elisa Ricci, Lijun Yin, Jeff Cohn, and Nicu Sebe, “Self-Adaptive Matrix Completion for Heart Rate Estimation from Face Videos under Realistic Conditions”, IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

· Xing Zhang, Zheng Zhang, Dan Hipp, Lijun Yin, and Peter Gerhardstein, “Perception Driven 3D Facial Expression Analysis Based on Reverse Correlation and Normal Component”, AAAC 6th International Conference on Affective Computing and Intelligent Interaction (ACII 2015), Sept. 2015.

· Shaun Canavan, Lijun Yin, Peng Liu, and Xing Zhang, “Feature Detection and Tracking on Geometric Mesh Data Using a Combined Global and Local Shape Model for Face and Facial Expression Analysis”, International Conference on Biometrics: Theory, Applications and Systems (BTAS'15), Sept. 2015.

Requesting Data (BP4D+)

With the agreement of the licensing offices of Binghamton University, the University of Pittsburgh, and RPI, the database is available for use by external parties. Due to agreements signed by the volunteer models, a written agreement must first be signed by the recipient and by the research administration office director of the recipient's institution before the data can be provided. Furthermore, the data will be provided to parties who are pursuing research for non-profit use. To make a request for the data, please contact Dr. Lijun Yin at lijun@cs.binghamton.edu. For any profit/commercial use of such data, please also contact Dr. Lijun Yin and Ms. Amy Breski <abreski@binghamton.edu> and <innovation@binghamton.edu> of the Office of Technology Licensing and Innovation Partnerships.

Note:

(1) Students are not eligible to be recipients. If you are a student, please have your supervisor make the request.

(2) Once the license agreement is complete, we will ask you to provide an external portable hard drive for copying the database and will ship it back to you afterwards. The data size is less than 6 TB after compression.

V. EB+ Dataset -- Expanded BP4D+

EB+, expanded from BP4D+, contains 60 subjects with 2D videos and FACS annotations of facial expressions.

Paper citation:

· Itir Onal Ertugrul, Jeff Cohn, Laszlo Jeni, Zheng Zhang, Lijun Yin, and Qiang Ji, “Cross-domain AU detection: domains, learning, approaches, and measures”, IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2019.

Research Team:

· Lijun Yin, Jeff Cohn, Qiang Ji, Laszlo Jeni, Andy Horowitz, and Peter Gerhardstein

Related Publications:

· I. Ertugrul, J. Cohn, L. Jeni, Z. Zhang, L. Yin, and Q. Ji, “Cross-domain AU Detection: Domains, Learning Approaches, and Measures”, IEEE Transactions on Biometrics, Behavior, and Identity Science, Vol. 2, Issue 2, pp. 158-171, April 2020. (Special issue of the Best of FG 2019)

Requesting Data (EB+)

With the agreement of the licensing offices of Binghamton University, the University of Pittsburgh, and RPI, the database is available for use by external parties. Due to agreements signed by the volunteer models, a written agreement must first be signed by the recipient and by the research administration office director of the recipient's institution before the data can be provided. Furthermore, the data will be provided to parties who are pursuing research for non-profit use. To make a request for the data, please contact Dr. Lijun Yin at lijun@cs.binghamton.edu. For any profit/commercial use of such data, please also contact Dr. Lijun Yin and Ms. Amy Breski <abreski@binghamton.edu> and <innovation@binghamton.edu> of the Office of Technology Licensing and Innovation Partnerships.

Note: Students are not eligible to be recipients. If you are a student, please have your supervisor make the request.

VI. BU-EEG Multimodal Facial Action Database

The BU-EEG multimodal facial action database records EEG signals and face videos of both posed facial actions and spontaneous expressions from 29 participants of different ages, genders, and ethnic backgrounds. The experiment has three sessions for the simultaneous collection of EEG signals (128 channels) and facial action videos, covering posed expressions, action units, and spontaneous emotions, respectively. A total of 2,320 experimental trials were recorded:

· Session 1: Videos of the six prototypical facial expressions (anger, disgust, fear, happiness, sadness, and surprise) and the corresponding 128-channel EEG signals.

· Session 2: Facial action videos (10 AUs) and the corresponding 128-channel EEG signals.

· Session 3: Authentic affective states (meditation and pain) and the corresponding 128-channel EEG signals.

Paper citation:

Xiaotian Li, Xiang Zhang, Huiyuan Yang, Wenna Duan, Weiying Dai, and Lijun Yin, “An EEG-Based Multi-Modal Emotion Database with Both Posed and Authentic Facial Actions for Emotion Analysis”, IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2020.

Requesting Data (BU-EEG)

With the agreement of the licensing offices of Binghamton University, the University of Pittsburgh, and RPI, the database is available for use by external parties. Due to agreements signed by the volunteer models, a written agreement must first be signed by the recipient and by the research administration office director of the recipient's institution before the data can be provided. Furthermore, the data will be provided to parties who are pursuing research for non-profit use. To make a request for the data, please contact Dr. Lijun Yin at lijun@cs.binghamton.edu. For any profit/commercial use of such data, please also contact Dr. Lijun Yin and Ms. Amy Breski <abreski@binghamton.edu> and <innovation@binghamton.edu> of the Office of Technology Licensing and Innovation Partnerships.

Note: Students are not eligible to be recipients. If you are a student, please have your supervisor make the request.

VII. BP4D++ -- Second Expanded Multimodal Facial Action Database

Expanding on BP4D+, we developed a larger-scale multimodal emotion database, BP4D++, which consists of 233 participants (132 females and 101 males) aged 18 to 70 years. The modalities include synchronized 3D, 2D, thermal, and physiological data sequences (e.g., heart rate, blood pressure, skin conductance (EDA), and respiration rate), and metadata (facial features and partially coded FACS). Each subject has 10 tasks (emotions). Ethnic/racial ancestries include Asian, Black, Hispanic/Latino, White, and others (e.g., Native American).

Paper citation:

Xiaotian Li, Zheng Zhang, Xiang Zhang, Taoyue Wang, Zhihua Li, Huiyuan Yang, Umur Ciftci, Qiang Ji, Jeff Cohn, and Lijun Yin, “Disagreement Matters: Exploring Internal Diversification for Redundant Attention in Generic Facial Action Analysis”, IEEE Transactions on Affective Computing (June 2023), doi: 10.1109/TAFFC.2023.3286838.

To make a request for the data, please contact Dr. Lijun Yin at lijun@cs.binghamton.edu. For any profit/commercial use of such data, please also contact Dr. Lijun Yin and Ms. Amy Breski <abreski@binghamton.edu> and <innovation@binghamton.edu> of the Office of Technology Licensing and Innovation Partnerships.

VIII. ReactioNet -- Facial Behavior Dataset with Both Stimuli and Subjects

ReactioNet contains 2,486 reaction video clips with the corresponding stimuli and 1.1 million in-the-wild reaction images. Each reaction video contains well-synchronized dyadic data of both the stimulus and the subject. The dataset covers 1,566 subjects, with ages ranging from 20 to 70 years. Ethnic ancestries include Asian, Black, Hispanic/Latino, Indian, Middle-Eastern, White, and others (e.g., Native American).

It provides 8 types of stimulus scenes (animation, film, game, object, show, sports, self-made video, and interview/public speech) and 59 finer-grained sub-scenes. The multimodal data in ReactioNet span different domains, including visual, audio, and caption data from the stimulus, the subject, and the global view. A large set of metadata is provided, including facial landmarks, head pose tracking, gaze tracking, FACS coding, and textual descriptions of the stimuli. In addition, 50,000 key frames are sparsely selected to form a compact data collection with high-quality annotations.

Paper citation:

Xiaotian Li, Taoyue Wang, Geran Zhao, Xiang Zhang, Xi Kang, and Lijun Yin, “ReactioNet: Learning High-order Facial Behavior from Universal Stimulus-Reaction by Dyadic Relation Reasoning”, IEEE/CVF International Conference on Computer Vision (ICCV), October 2023.

To make a request for the data, please contact Dr. Lijun Yin at lijun@cs.binghamton.edu.

Acknowledgement:

This material is based upon work supported in part by the National Science Foundation under grants IIS-0541044, IIS-0414029, IIS-1051103, CNS-1205664, and CNS-1629898. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of the National Science Foundation. We would also like to thank the SUNY Research Foundation (Binghamton U), NYSTAR's James D. Watson Investigator Program, and SUNY IITG for their support.


Copyright © GAIC lab, SUNY at